Synology C2 Object Storage

Since the launch of DSM 7, Synology has been pushing its C2 plans, rolling out new services and opening a new C2 data center in Taiwan.

Just last month, Synology presented its upcoming C2 Surveillance service, which remains in beta until the end of October. In a few months (sometime in July), the C2 portfolio will also gain a new member: C2 Object Storage.

C2 Object Storage - the unstructured data storage solution

So what is Object Storage? As advertised, it will be your solution for storing and backing up any unstructured data, such as email, collaboration files, survey responses, social media content, search engine queries, IoT sensor data, etc.

Beyond that, it can also hold images and audio/video files from media hosting, as well as VM backups and data from running applications.

To meet the demand for extracting insight from large volumes of unstructured data, Synology offers a way to store and manage that data on its C2 platform. As with other C2 services, Object Storage has no hidden fees or egress costs for data operations (moving, deleting, accessing, etc.), so you don't have to worry about unpredictable costs.

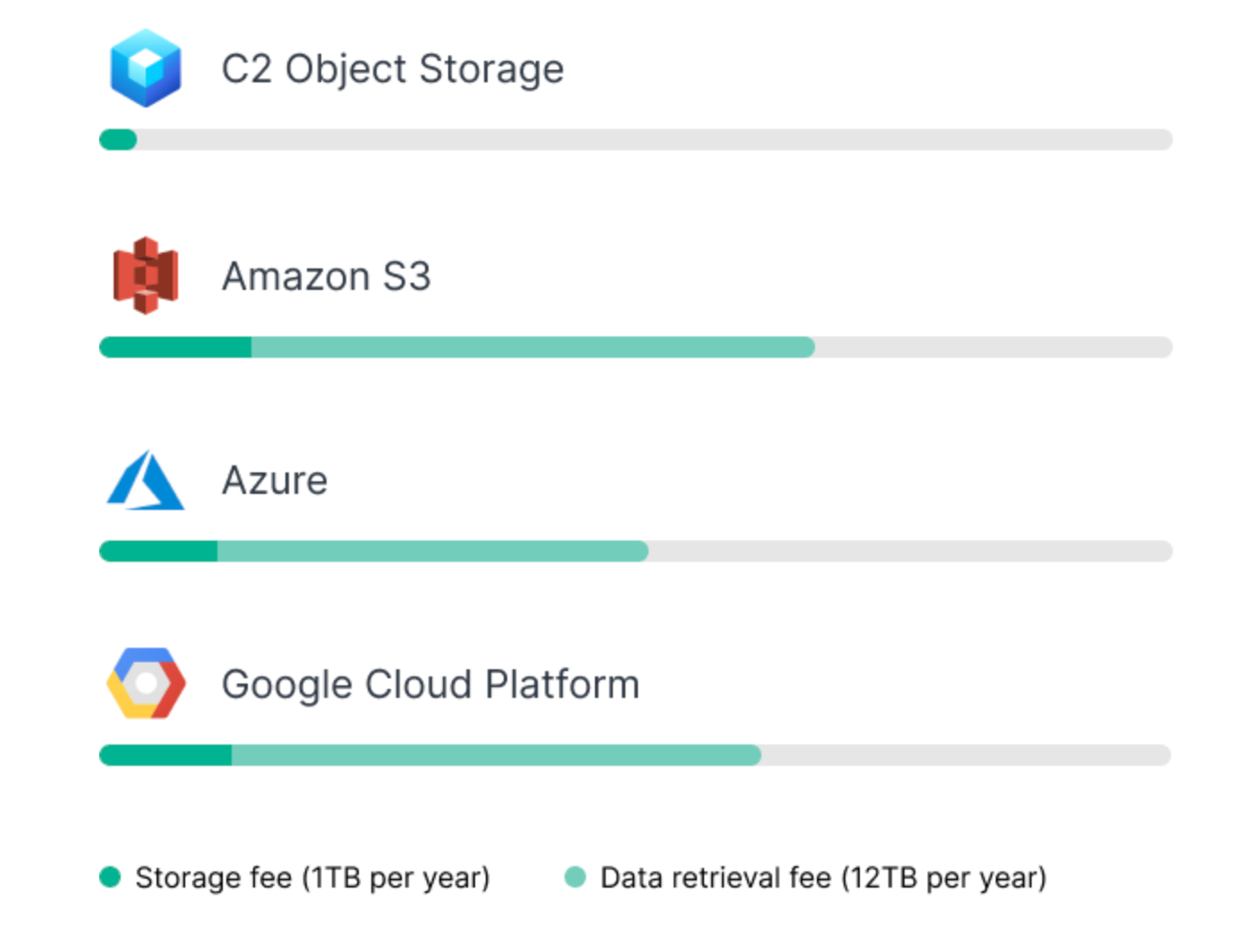

Starting at just $6.99 per month for 1 TB, the price is already 3-4x lower than comparable offerings from AWS or Azure.

Object Storage will support Amazon S3-compatible APIs and include a free monthly download quota. Finally, the service is rated at 11 nines of data durability (99.999999999%).
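To put the 11-nines durability figure in perspective, here is a worked estimate. It assumes, as is common for object storage durability ratings, that 99.999999999% is an annual per-object figure and that losses are independent; Synology's exact definition may differ.

```latex
\begin{aligned}
P_{\text{loss}} &= 1 - 0.99999999999 = 10^{-11} \\
\mathbb{E}[\text{objects lost per year}] &= N \cdot P_{\text{loss}} = 10^{7} \cdot 10^{-11} = 10^{-4}
\end{aligned}
```

In other words, with 10 million objects stored, you would expect to lose one object roughly every 10,000 years on average.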

At the moment, the service is in the early access stage, meaning it is invite-only. If you want to test it out, you are welcome to register for it using the following link.

Because Object Storage is compatible with the Amazon Simple Storage Service (S3) APIs, you will be able to integrate it with existing tools and code. One example is Synology's own Hyper Backup, which supports S3-compatible destinations.

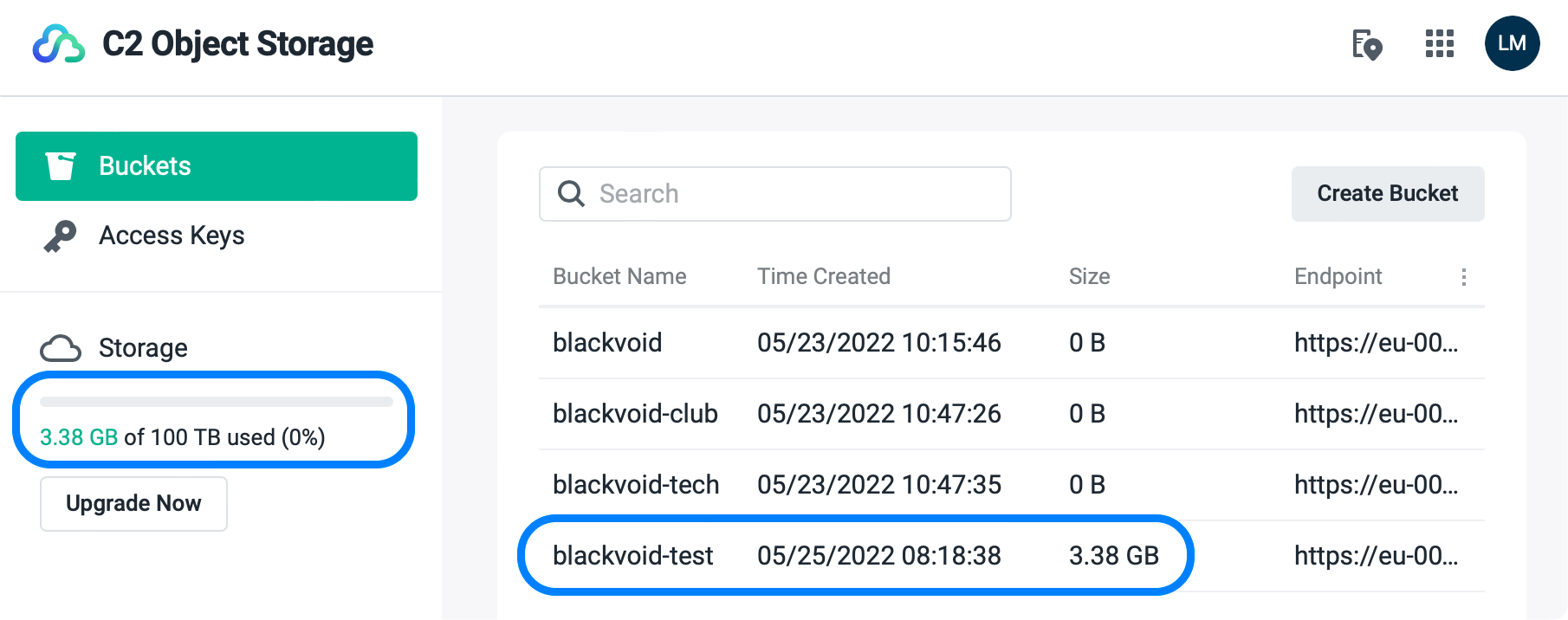

The service works on the principle of buckets and keys, just as you would use with Amazon S3 or Backblaze B2. You can create up to 1,000 keys with different permissions and bind each one to all buckets or only to specific ones (you can create up to 100 buckets), which increases security and minimizes risk.

Each of the three major C2 data centers will have its own unique URL:

- object.tw.c2.synology.com (APAC - Taiwan)

- object.eu.c2.synology.com (Europe - Frankfurt)

- object.us.c2.synology.com (North America - Seattle)
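If you script against the service instead of using Hyper Backup, you would typically hand one of these hosts to your S3 client as its endpoint. Below is a minimal Python sketch of picking the endpoint by region. The short region codes (`tw`, `eu`, `us`), the `https://` scheme and the `endpoint_url` helper are illustrative assumptions, and if the portal shows a bucket-specific endpoint, that is the one to prefer.

```python
# Hypothetical helper: map a C2 region code to its Object Storage endpoint.
# Host names come from the list above; the region codes and the https://
# scheme are assumptions for illustration.
C2_ENDPOINTS = {
    "tw": "object.tw.c2.synology.com",  # APAC - Taiwan
    "eu": "object.eu.c2.synology.com",  # Europe - Frankfurt
    "us": "object.us.c2.synology.com",  # North America - Seattle
}


def endpoint_url(region: str) -> str:
    """Return the HTTPS endpoint URL for a C2 region code such as 'eu'."""
    try:
        host = C2_ENDPOINTS[region.lower()]
    except KeyError:
        raise ValueError(f"unknown C2 region: {region!r}") from None
    return f"https://{host}"


print(endpoint_url("eu"))  # https://object.eu.c2.synology.com
```

Raising `ValueError` for an unknown code keeps a typo from silently sending requests to the wrong data center.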

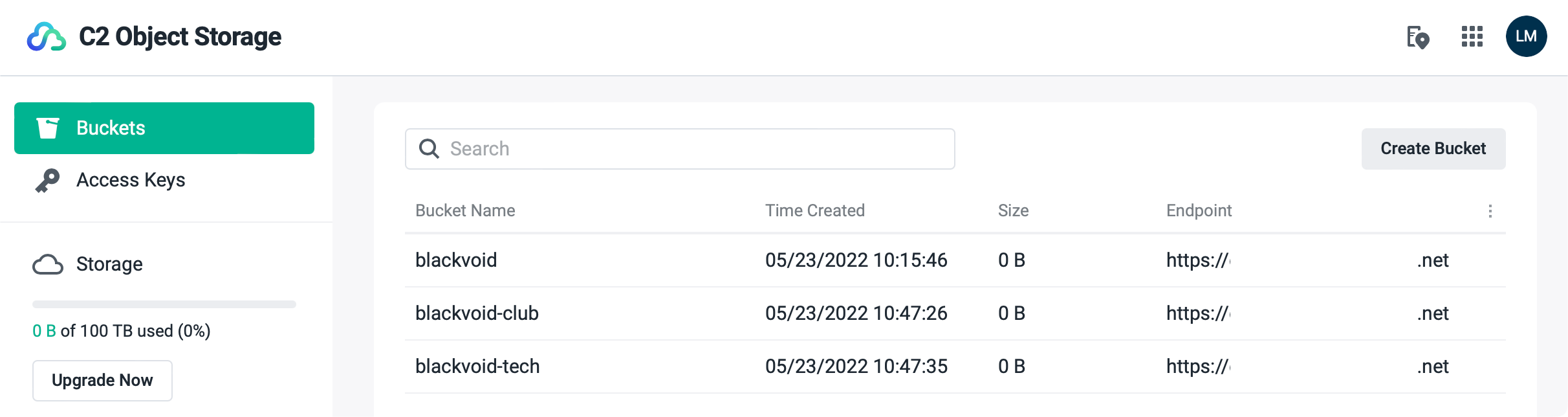

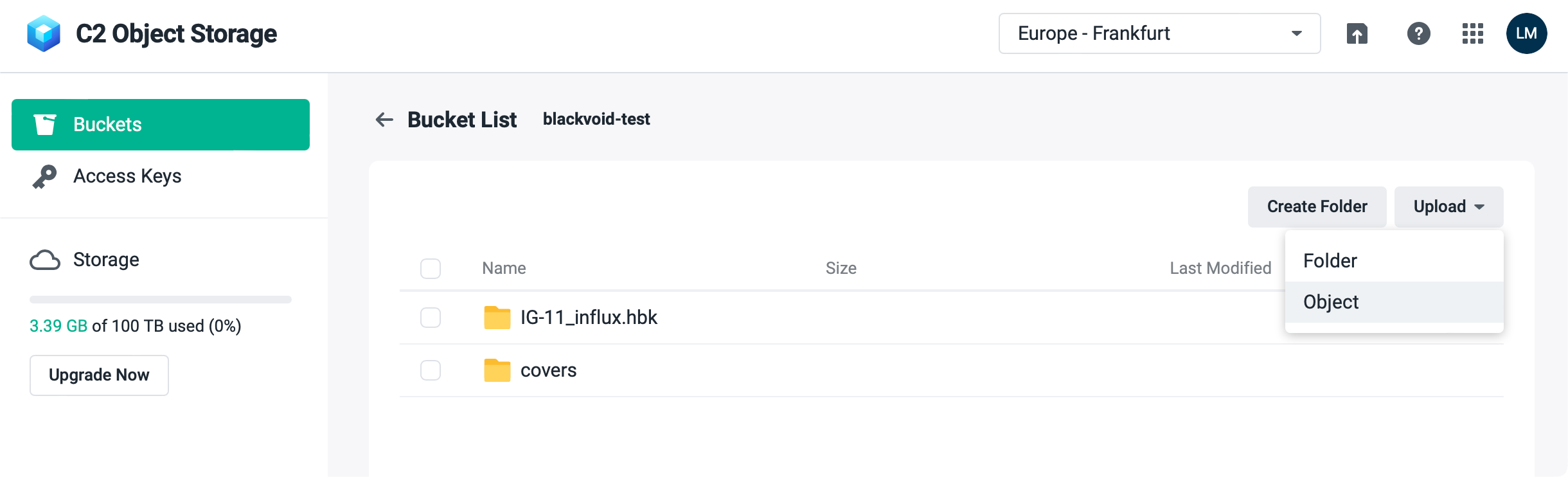

Managing buckets

Using the C2 Object Storage web UI, you can view, create, and delete buckets. Bucket names are unique across the global Object Storage namespace (all C2 Object Storage data centers), so you can only use a name that no other Object Storage user has already taken.

As mentioned before, you can create up to 100 buckets, and buckets can only be created, modified, and deleted through the web UI, not the API.

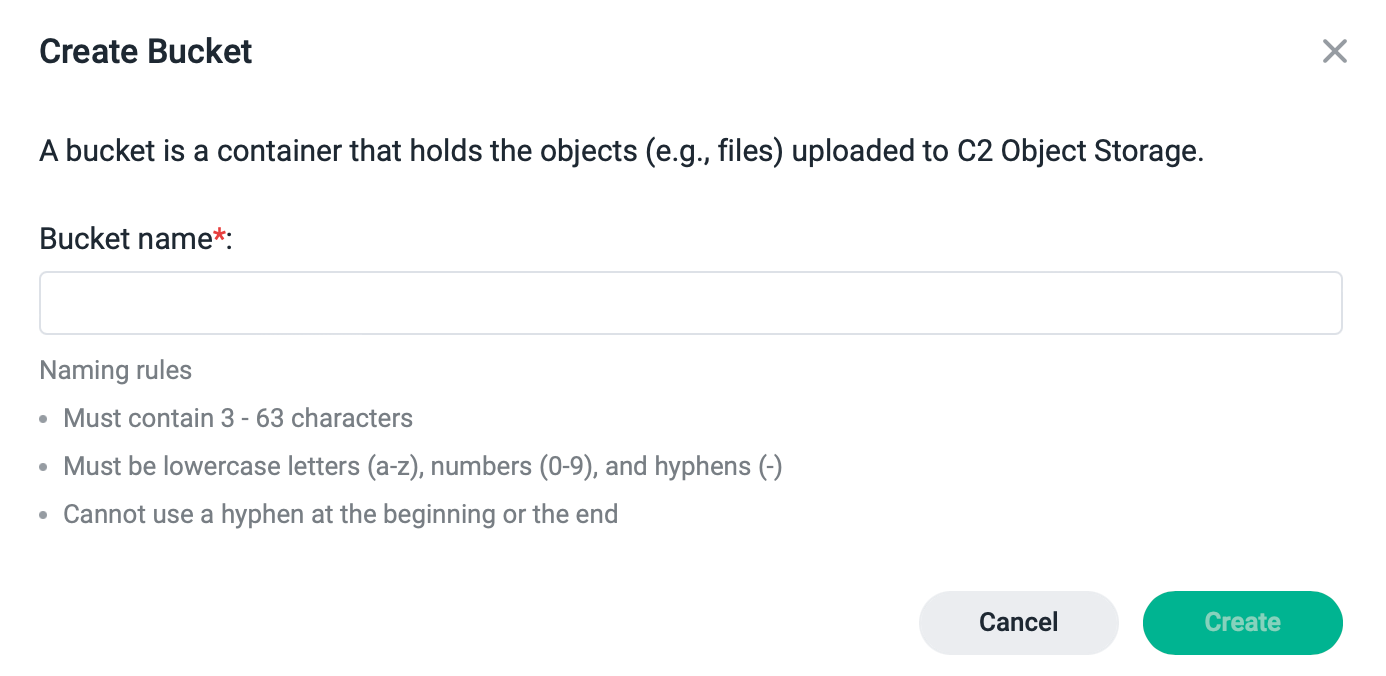

Bucket names must follow these rules:

- The length must be between 3 and 63 characters.

- Only lowercase letters (a-z), numbers (0-9), and hyphens (-) are accepted.

- Hyphens cannot be put at the beginning or at the end.
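The naming rules above are easy to check locally before you try a name in the web UI. Here is a minimal Python sketch covering only the three listed rules; global uniqueness can only be verified by the service, and the `is_valid_bucket_name` helper is hypothetical.

```python
import re

# Rules from the list above: 3-63 characters, only a-z, 0-9 and '-',
# and no hyphen at the start or end. Uniqueness is not checked here.
_BUCKET_RE = re.compile(r"[a-z0-9](?:[a-z0-9-]*[a-z0-9])?")


def is_valid_bucket_name(name: str) -> bool:
    """Check a candidate bucket name against the documented naming rules."""
    return 3 <= len(name) <= 63 and _BUCKET_RE.fullmatch(name) is not None


for candidate in ["my-backups", "My-Backups", "-backups", "ab", "nas-01"]:
    print(candidate, is_valid_bucket_name(candidate))
```

The regular expression enforces the character set and the hyphen rule, while the length check is done separately so each rule stays readable.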

If you delete a bucket, keep in mind that its name becomes available again and anyone else can claim it. If you want to keep the bucket and its name, simply empty it instead.

After creation, each bucket gets a unique endpoint URL similar to this: https://yy-xxx.xx.zzzzzzz.net.

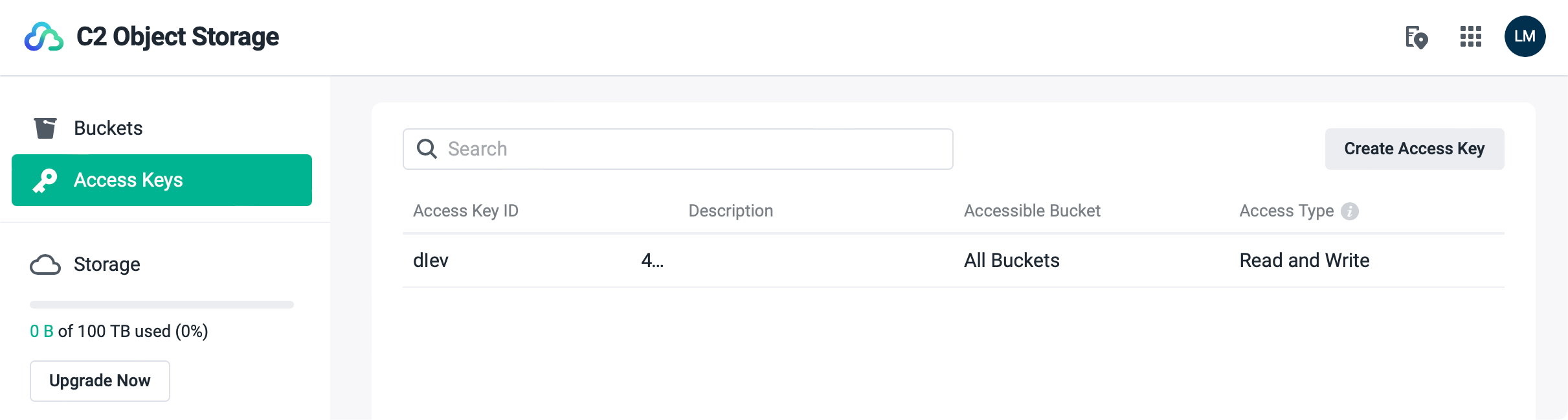

Managing keys

Along with the bucket endpoint URL, you will also need an access key. A key can be bound to a specific bucket or to all of them, with either read-only or read/write permissions.

Each key has two parts: an access key ID and a secret key. Once created, keys can be downloaded; either way, be sure to save the secret key value, as the UI will not show it again after it is first displayed.

API compatibility and limitations

Although Object Storage is compatible with the Amazon S3 APIs, some functions are currently not supported.

Here are the current compatible APIs:

Read buckets

- HeadBucket

- ListBuckets

- GetBucketACL

- GetBucketLocation

- ListMultipartUploads

Create objects

- PutObject

- CopyObject

- CreateMultipartUpload

- UploadPart

- AbortMultipartUpload

- UploadPartCopy

- CompleteMultipartUpload

Read objects

- HeadObject

- GetObjectACL

- ListParts

- ListObjects

- ListObjectsV2

Delete objects

- DeleteObject

- DeleteObjects

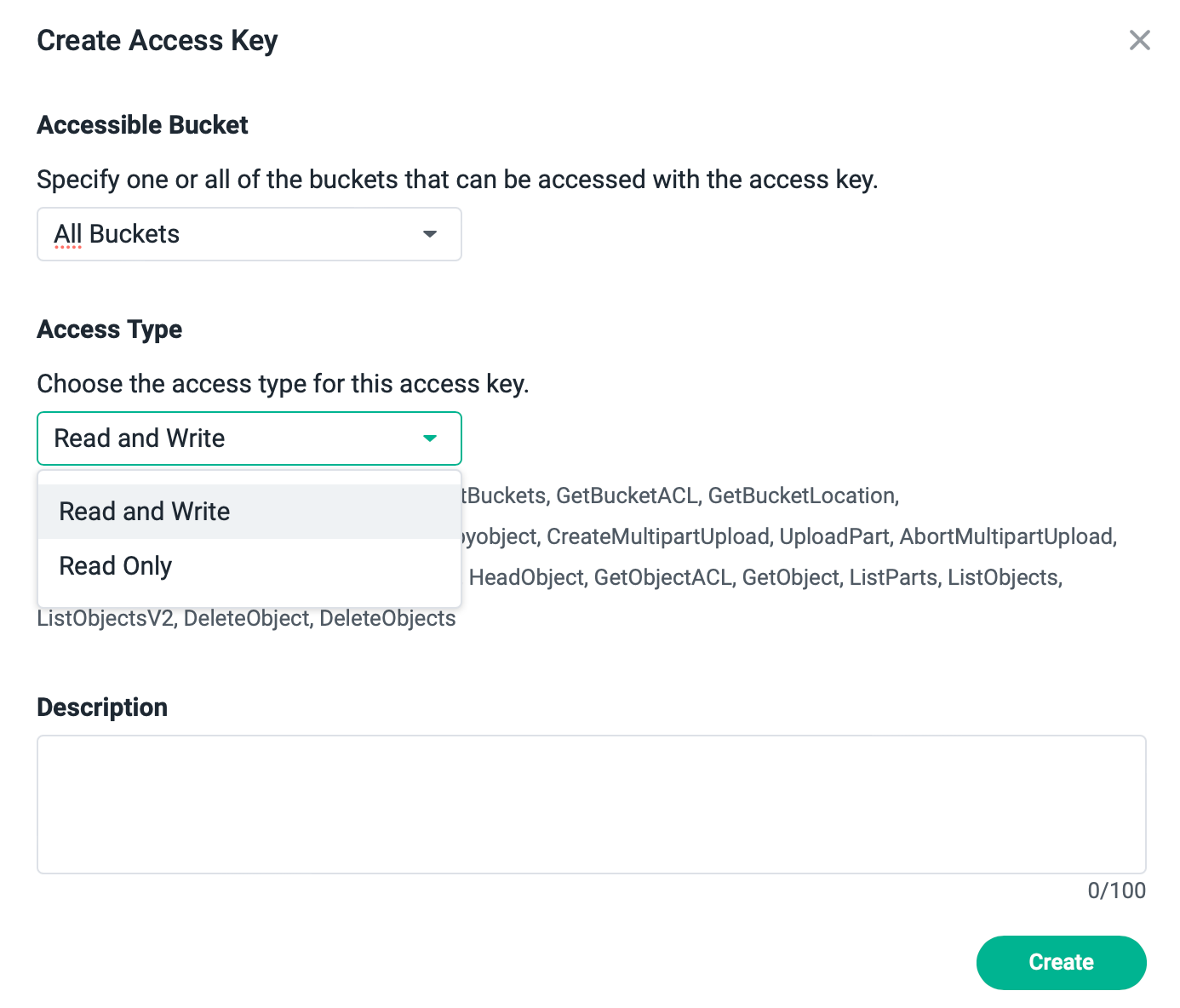

Available APIs by access types

Read Only

HeadBucket, ListBuckets, GetBucketACL, GetBucketLocation, ListBucketMultipartUploads, HeadObject, GetObjectACL, ListParts, ListObjects, ListObjectsV2, ListObjectVersions

Read and Write

HeadBucket, ListBuckets, GetBucketACL, GetBucketLocation, ListBucketMultipartUploads, PutObject, CopyObject, CreateMultipartUpload, UploadPart, AbortMultipartUpload, UploadPartCopy, CompleteMultipartUpload, HeadObject, GetObjectACL, ListParts, ListObjects, ListObjectsV2, ListObjectVersions, DeleteObject, DeleteObjects
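To make the two access levels concrete, here is a small Python model of a key: a hypothetical `AccessKey` class with a read-only or read/write level and an optional bucket binding, checked against the operation lists above. It only mirrors the documented lists; real enforcement happens on Synology's side, and the class and method names are illustrative.

```python
from dataclasses import dataclass
from typing import Optional

# Operations copied from the "Read Only" and "Read and Write" lists above.
READ_ONLY_OPS = frozenset({
    "HeadBucket", "ListBuckets", "GetBucketACL", "GetBucketLocation",
    "ListBucketMultipartUploads", "HeadObject", "GetObjectACL", "ListParts",
    "ListObjects", "ListObjectsV2", "ListObjectVersions",
})
# Operations that appear only in the "Read and Write" list.
WRITE_OPS = frozenset({
    "PutObject", "CopyObject", "CreateMultipartUpload", "UploadPart",
    "AbortMultipartUpload", "UploadPartCopy", "CompleteMultipartUpload",
    "DeleteObject", "DeleteObjects",
})
READ_WRITE_OPS = READ_ONLY_OPS | WRITE_OPS


@dataclass(frozen=True)
class AccessKey:
    """Hypothetical key model: permission level plus optional bucket binding."""
    key_id: str
    read_write: bool
    bucket: Optional[str] = None  # None means the key is bound to all buckets

    def allows(self, operation: str, bucket: str) -> bool:
        if self.bucket is not None and self.bucket != bucket:
            return False  # key is bound to a different bucket
        ops = READ_WRITE_OPS if self.read_write else READ_ONLY_OPS
        return operation in ops


key = AccessKey("hypothetical-key-id", read_write=False, bucket="nas-backups")
print(key.allows("ListObjectsV2", "nas-backups"))  # True
print(key.allows("PutObject", "nas-backups"))  # False
```

Binding a read-only key to a single bucket, as in the usage lines, is the least-privilege setup the bucket/key model is meant to enable.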

These are all the supported functions, but as stated before, some are not supported:

Unsupported functions

- ACL (object-level ACLs and public buckets)

- IAM roles

- Bucket logging

- Server-side encryption

- Storage lifecycle rules

- Website configuration

- CORS

- Object locks

- Object tagging

- Versioning

- Creating, editing, or deleting buckets and access keys through the API

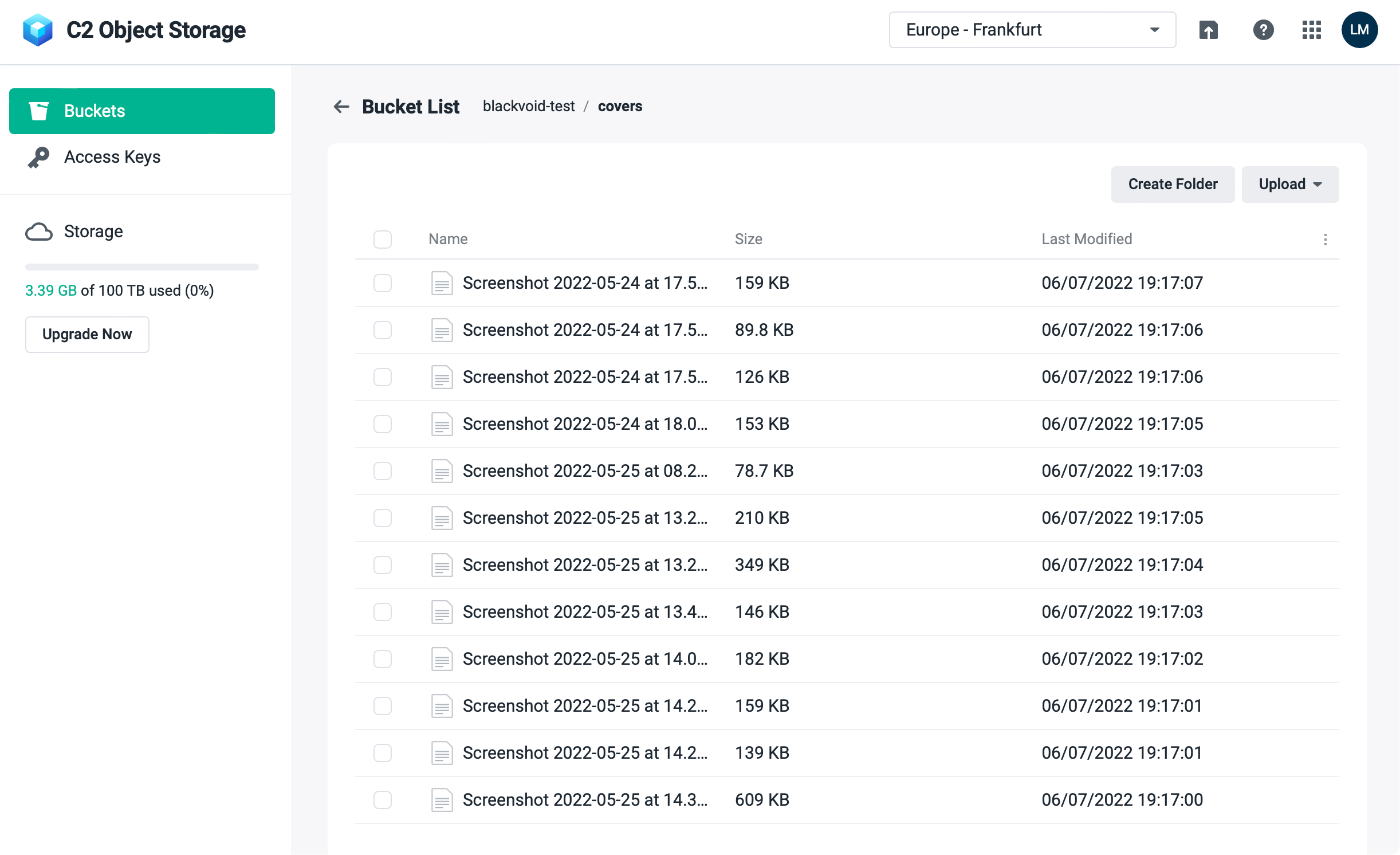

Object Storage user portal

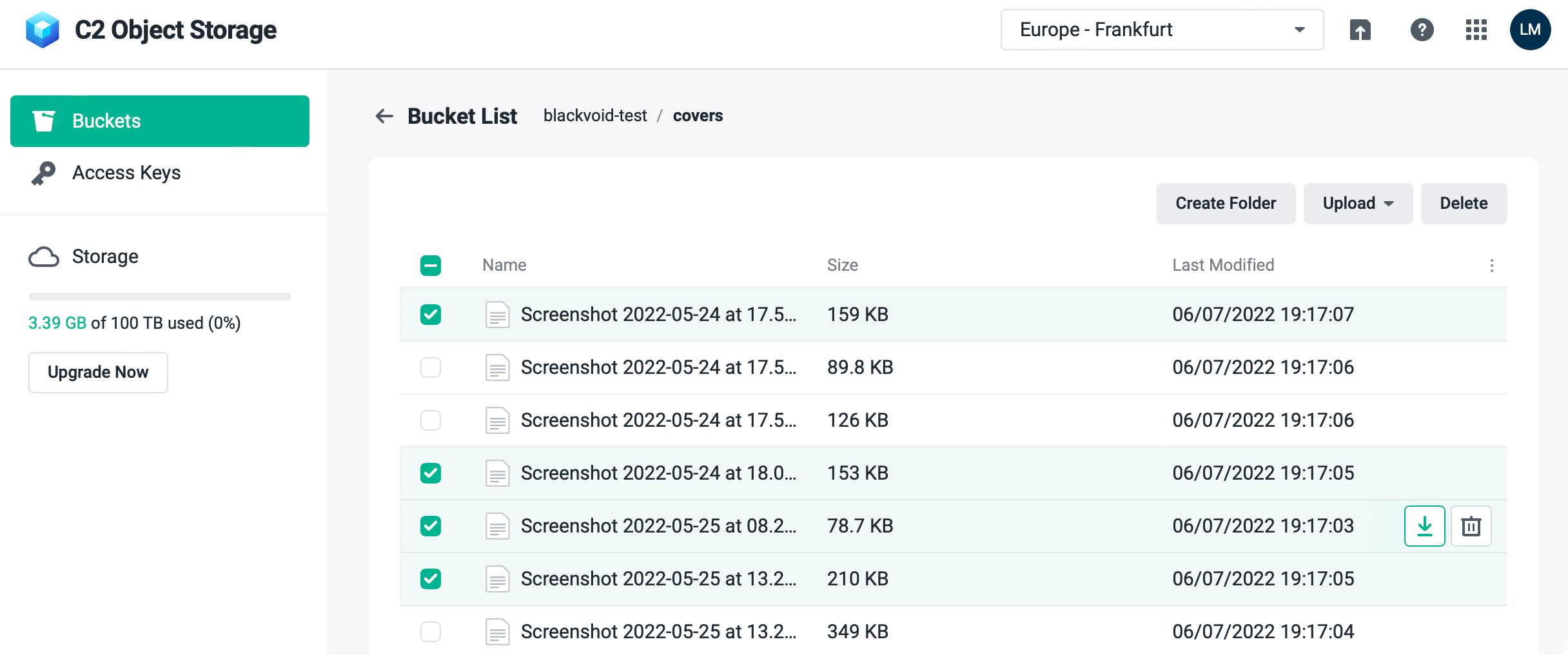

Once you log in to the portal, besides creating buckets and keys, you can view, upload, delete, and download files, or use Synology or compatible third-party apps to manage the buckets and their data.

You can also run operations on multiple objects at once (like deleting several files), as well as download a specific object if needed.

Hyper Backup example of using C2 Object Storage

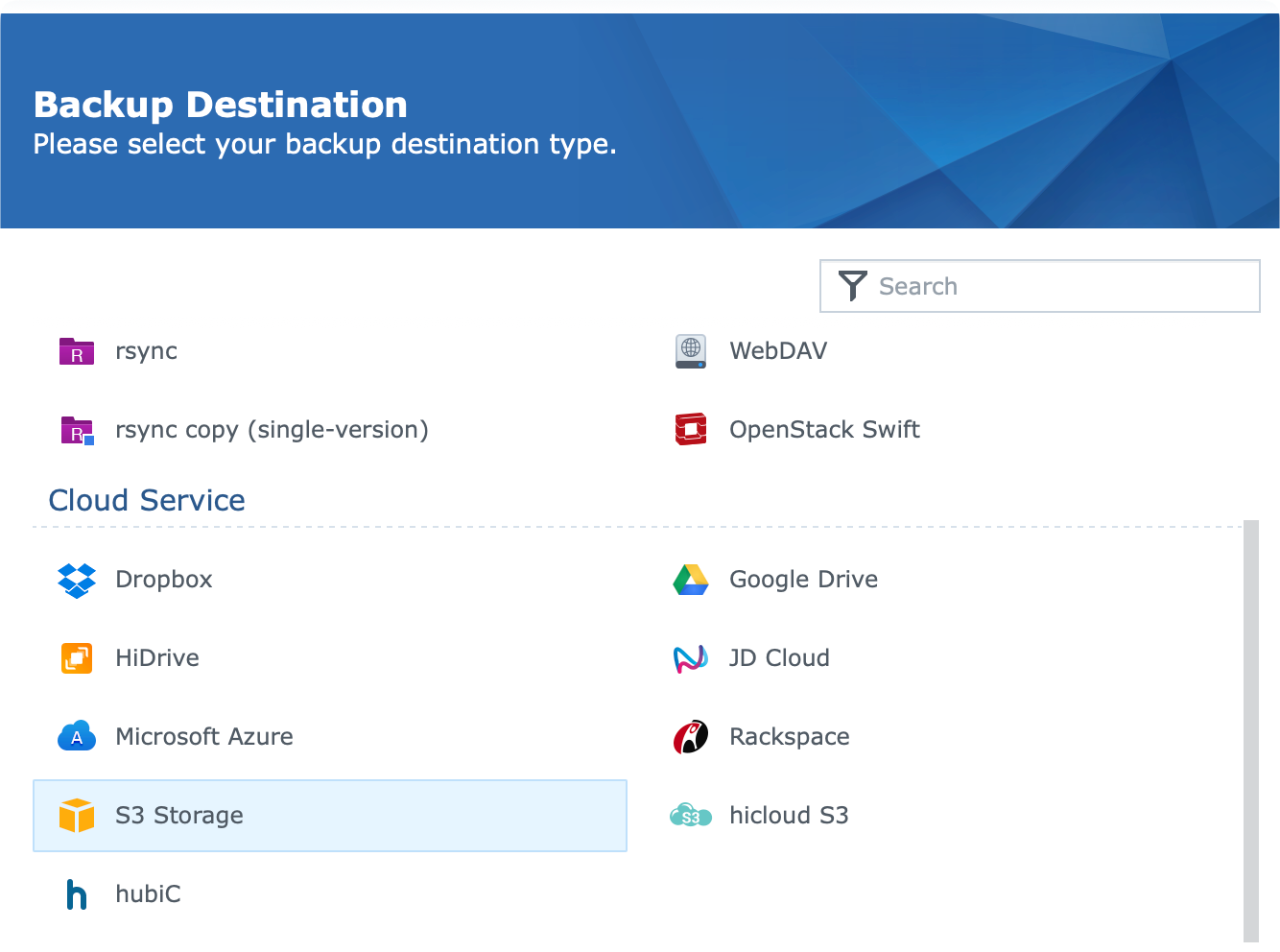

One way to use this service today without writing custom code is Hyper Backup and its option to connect to S3 services.

Open Hyper Backup, choose to create a Data backup task, and select the S3 Storage option to proceed.

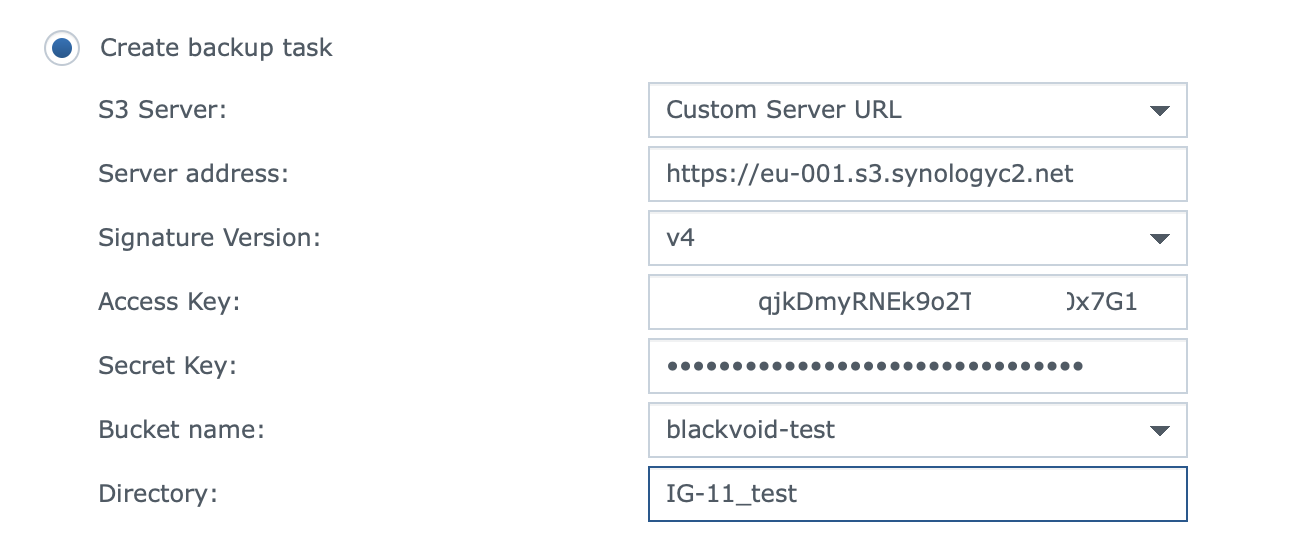

Set the values as follows:

- S3 Server: select Custom Server URL

- Server address: paste in your endpoint URL from the Object Storage portal

- Signature version: v4 (v2 is not supported!)

- Access Key: your Object Storage access key ID

- Secret Key: your secret key

- Bucket name: select a bucket your key has permission to access

- Directory: the name of the folder that will be visible inside the bucket
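Hyper Backup computes the request signatures for you, but the same requirement applies if you connect your own code: requests must be signed with AWS Signature Version 4. Below is a minimal stdlib-only Python sketch of the SigV4 signing-key derivation and final signature step, following AWS's published algorithm. Building the canonical request and string-to-sign is omitted, and the region string C2 expects is not stated in this article, so the value below is a placeholder assumption. In practice an SDK such as boto3 configured with `signature_version="s3v4"` handles all of this.

```python
import hashlib
import hmac


def _hmac_sha256(key: bytes, msg: str) -> bytes:
    return hmac.new(key, msg.encode("utf-8"), hashlib.sha256).digest()


def sigv4_signing_key(secret_key: str, date: str, region: str, service: str = "s3") -> bytes:
    """Derive the SigV4 signing key via an HMAC chain over date, region, service.

    date is YYYYMMDD. The region value C2 expects is an assumption here.
    """
    k_date = _hmac_sha256(("AWS4" + secret_key).encode("utf-8"), date)
    k_region = _hmac_sha256(k_date, region)
    k_service = _hmac_sha256(k_region, service)
    return _hmac_sha256(k_service, "aws4_request")


def sigv4_signature(signing_key: bytes, string_to_sign: str) -> str:
    """Hex-encoded HMAC-SHA256 of the string-to-sign."""
    return hmac.new(signing_key, string_to_sign.encode("utf-8"), hashlib.sha256).hexdigest()


# Placeholder values for illustration only (hypothetical secret, date, region).
signing_key = sigv4_signing_key("HYPOTHETICAL-SECRET-KEY", "20240101", "placeholder-region")
string_to_sign = "\n".join([
    "AWS4-HMAC-SHA256",
    "20240101T000000Z",
    "20240101/placeholder-region/s3/aws4_request",
    hashlib.sha256(b"canonical request goes here").hexdigest(),
])
print(sigv4_signature(signing_key, string_to_sign))
```

Because the signing key is scoped to a date, region, and service, a leaked signature cannot be replayed against a different region or on a different day, which is one reason S3-compatible services tend to drop the older v2 scheme.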

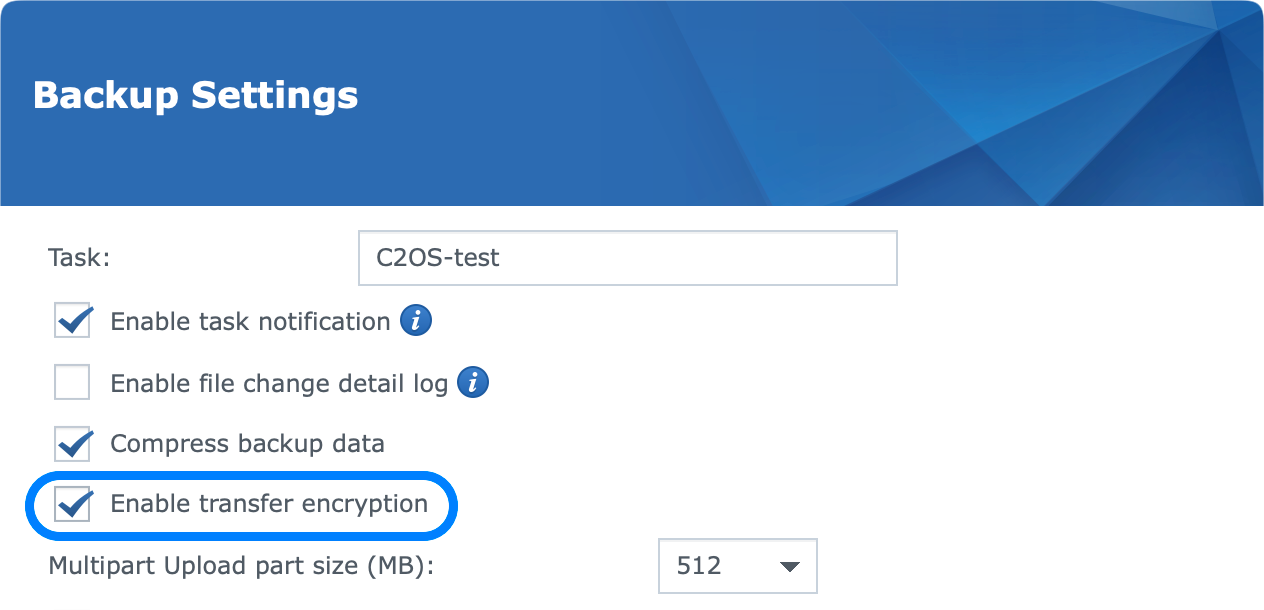

After you select the data to back up and reach the final page of the wizard (Backup Settings), make sure the Enable transfer encryption setting is turned on. Otherwise, you will not be able to complete the wizard and start the actual backup.

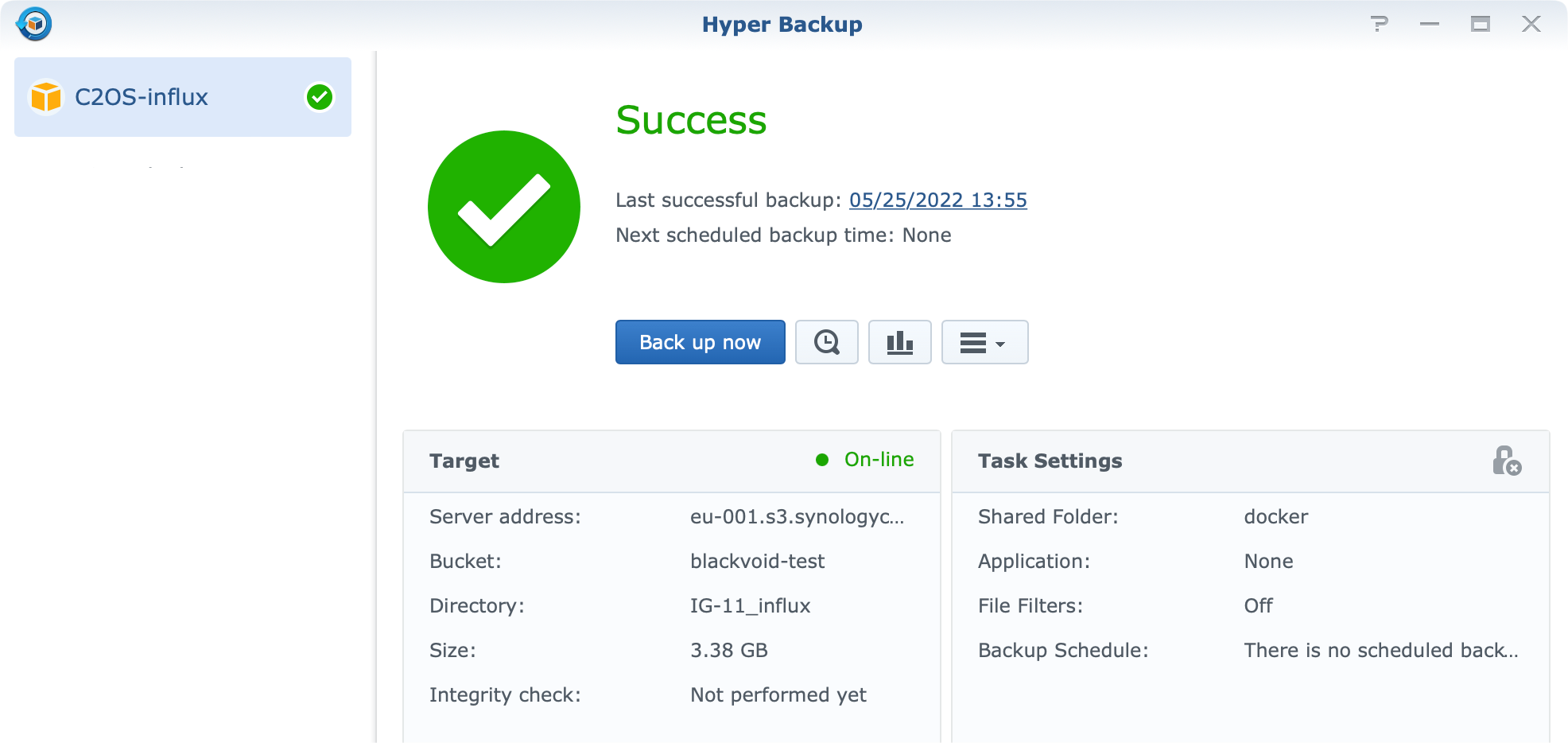

Finally, when the task runs, you will get the classic Hyper Backup view of the process.

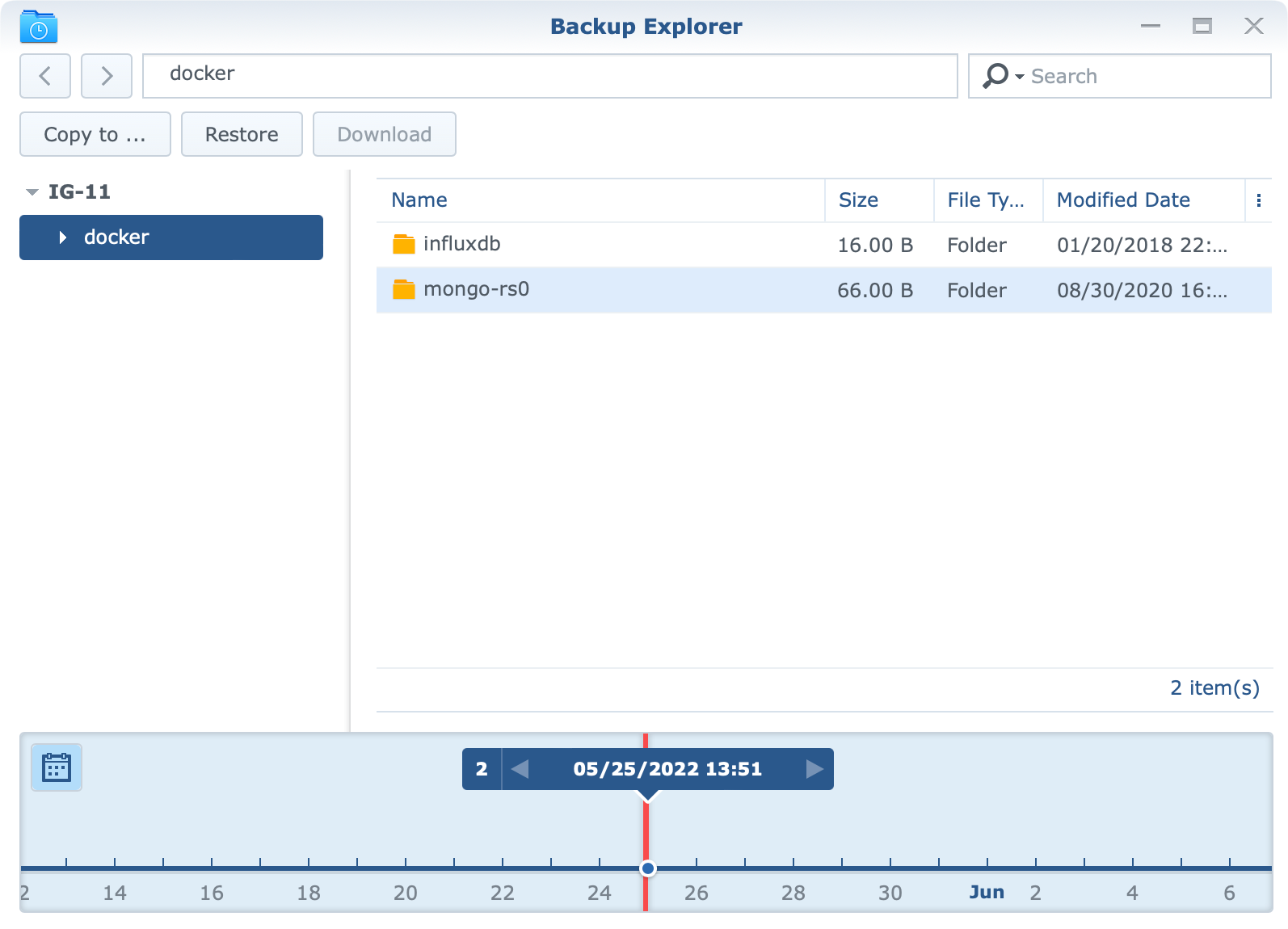

At the moment, you cannot access or delete this data through the C2 Object Storage portal; you can only see the space used (this feature is coming in the near future). However, if you use Hyper Backup for the task, you can use Hyper Backup Explorer or Vault to view the content and then download it or restore it in place.

Speed and NAS utilization

In terms of performance, the speed is decent, while running these encrypted tasks puts a solid load on the NAS CPU.

As the CPU graph shows, there is about 35% CPU load (yellow) coming directly from the Hyper Backup application. The load decreases over time as the encrypted backup nears completion.

Upload speed to C2 Object Storage (Frankfurt data center) peaked at about 21 MB/s (half of my total upload speed), as shown in the bottom graph. A little under 3.4 GB took about 4 minutes to complete.
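As a rough cross-check of those figures (treating 1 GB as 1,000 MB, MB as megabytes, and the times as approximate), the average throughput over the whole job comes out below the 21 MB/s peak:

```latex
\begin{aligned}
\bar{v} &\approx \frac{3.4\ \text{GB}}{4\ \text{min}} = \frac{3400\ \text{MB}}{240\ \text{s}} \approx 14.2\ \text{MB/s} \\
v_{\text{peak}} &\approx 21\ \text{MB/s} \times 8\ \tfrac{\text{bit}}{\text{B}} = 168\ \text{Mbit/s}
\end{aligned}
```

Since the peak was about half of the available upload, the line itself is roughly 2 × 168 ≈ 336 Mbit/s, and the lower average means the job spent part of its run below peak speed.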

Conclusion

Since the service is still in a closed, invite-only phase, there may be a number of bugs to iron out. That said, in the few tests I have run so far, it works well, especially with tools most Synology users are already familiar with, like Hyper Backup.

Alongside Amazon S3 and Backblaze B2, you now have the option to target Synology C2 storage as a backup destination via the S3 APIs. Given the benefits mentioned at the start of this article, it is worth looking into. Another great upside is that the service works with platforms that are not related to Synology at all, so you do not need to own a Synology NAS to use Object Storage. That makes it a great (and inexpensive) destination for backing up all your structured or unstructured data.

I'm sure Synology will keep developing this C2 service and bring more features to it in the near future.

If you have any questions or comments on the matter, as always, feel free to post in the comment section below.