Synology Extended Warranty Plus & Advanced RMA

Any appliance, let alone a NAS that holds millions of personal or business files, is expected to last a long time and to come with a reasonable warranty period.

Most Synology devices come with a factory warranty of two (2) years, or three (3) if the device is from the higher end of the range. On top of that, in April 2022, Synology introduced its EW+ or Extended Warranty Plus service.

Synology Extended Warranty Plus service article

Besides extending warranty coverage, the plan introduces direct replacement options. Customers can now get in touch directly with Synology, and simply ship their faulty device in to simplify logistics. Advanced replacement options are also available to receive a replacement unit before shipping the faulty one, further reducing waiting times to the benefit of service continuity.

With EW+, customers can add two (2) years of warranty as well as unlock direct and advanced RMA options. As already stated, advanced RMA allows customers to get a replacement unit before returning the faulty one to Synology. This speeds up the whole process dramatically, and there is no middleman in the entire chain, so end-to-end communication is done directly with the company.

Just a little over a year ago I introduced a new RS2423+ unit in my setup, as a replacement for one of my RS3614xs+. On top of that, just last month, I published an article about the whole experience of running with a Synology device and Synology hard drives.

One year with RS2423 and HAT3300 drives

Well, as luck would have it, about two weeks ago I detected a potential issue with the device that eventually put me on an ARMA (advanced RMA) course with Synology. What follows is my experience throughout the whole process.

The problem

The RS2423+ in question is an excellent, rock-solid device, drives included, so what happened is not specific to that series of devices. It is something that is known to happen and simply needs to be addressed when it does.

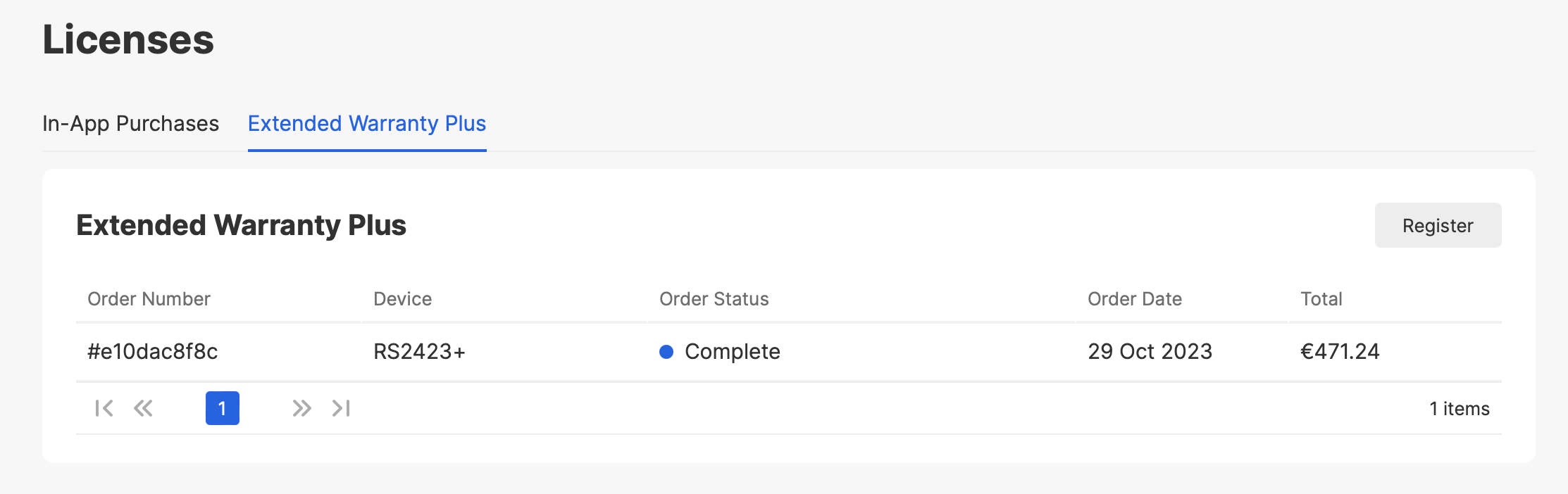

The device is one of my main production devices and as such has a lot of data on it, as well as a fair amount of services. Replacing it is not something that will go unnoticed, let alone be cheap (around €3000 in its current setup). The device has a three-year warranty, but I have activated an additional EW+ to unlock two extra years of support and warranty, as well as advanced RMA.

With a price close to €500 (including local tax) for two years, it seemed like a good option for a device that can accommodate up to 200TB per volume.

Considering what happened a week ago, it was a good call in the end.

About a week after the release of DSM 7.2.2, it was time to update the RS2423+. The device had run without a single issue for the past year, so what followed came as a bit of a surprise. Uptime was about 363 days (the last reboot was to apply the 7.2.1-UP1 patch), since it is a production device and reboots are not common. It is also under UPS protection.

Other devices were already updated to version 7.2.2, so what could go wrong, right? To get to 7.2.2 from 7.2.1-UP1, I first had to update to UP5 before moving to 7.2.2. Simple enough. Not really.

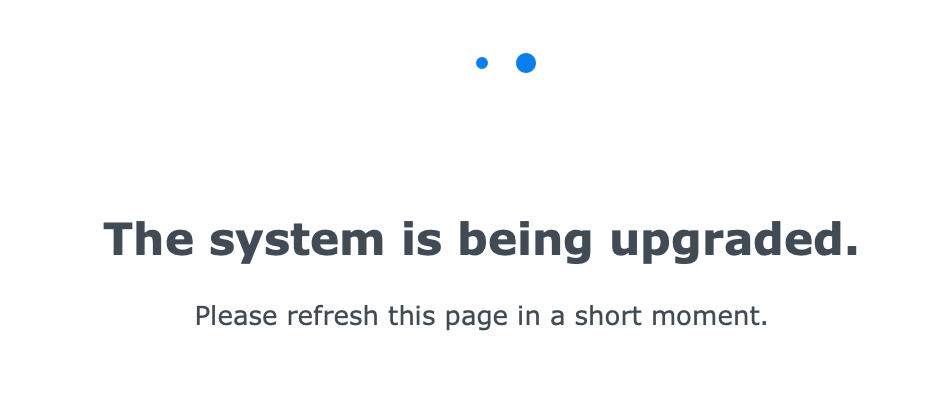

In the past 15 years of using Synology devices, starting with DSM 3.x, I have never seen the update progress bar (the "ring") stuck at 1%. After about 10 minutes and a single refresh of the page, I noticed that the NAS had not rebooted at all and DSM was reporting a "The system is being upgraded" message.

What was interesting is that the whole "shutdown" process had started. The containers started to shut down, other processes were turning off, and then the next moment everything started to boot back up without the usual DSM reboot.

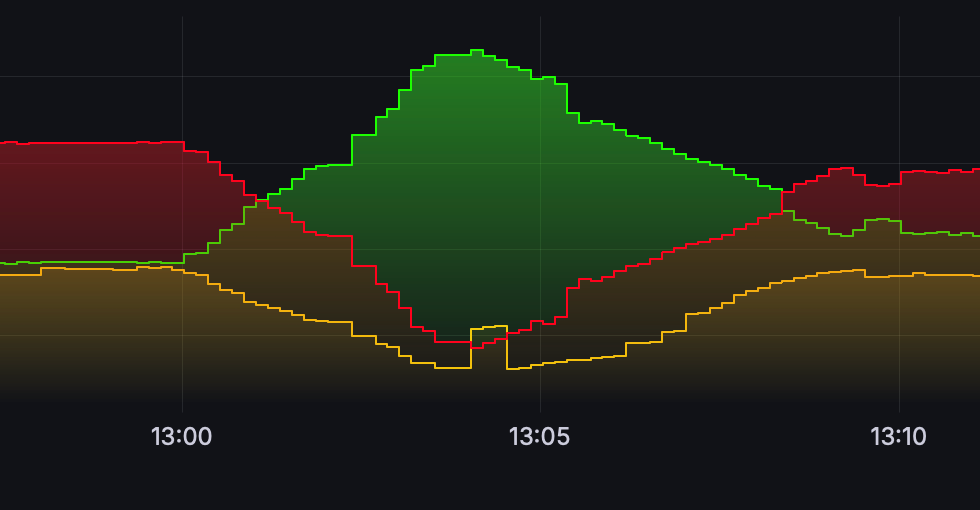

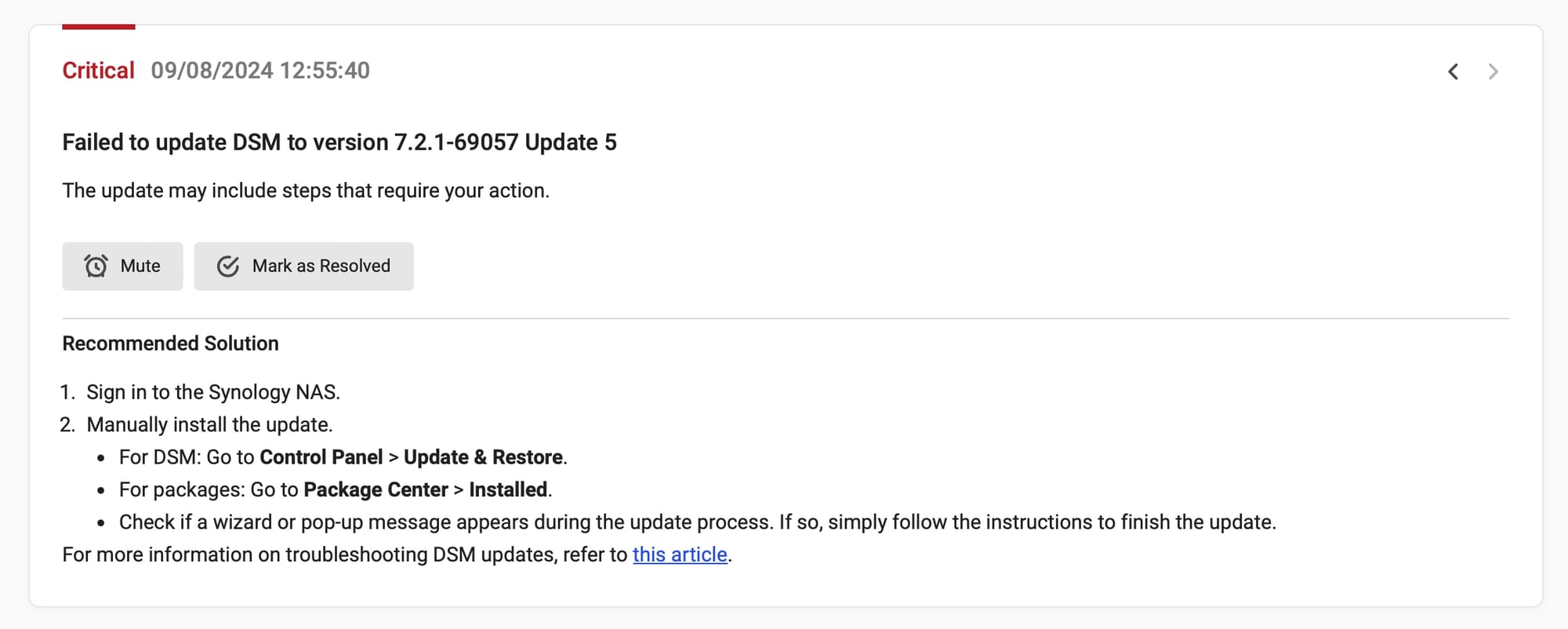

The upgrade process started at 1 PM, and for the next four minutes it was turning services off, as indicated by the red graph falling and the green one rising (releasing used RAM). As soon as that was completed, the process reversed and the same services started to boot back up. About 12 minutes after the start, I got a warning from Active Insight that the update had failed, and DSM was back to its old self.

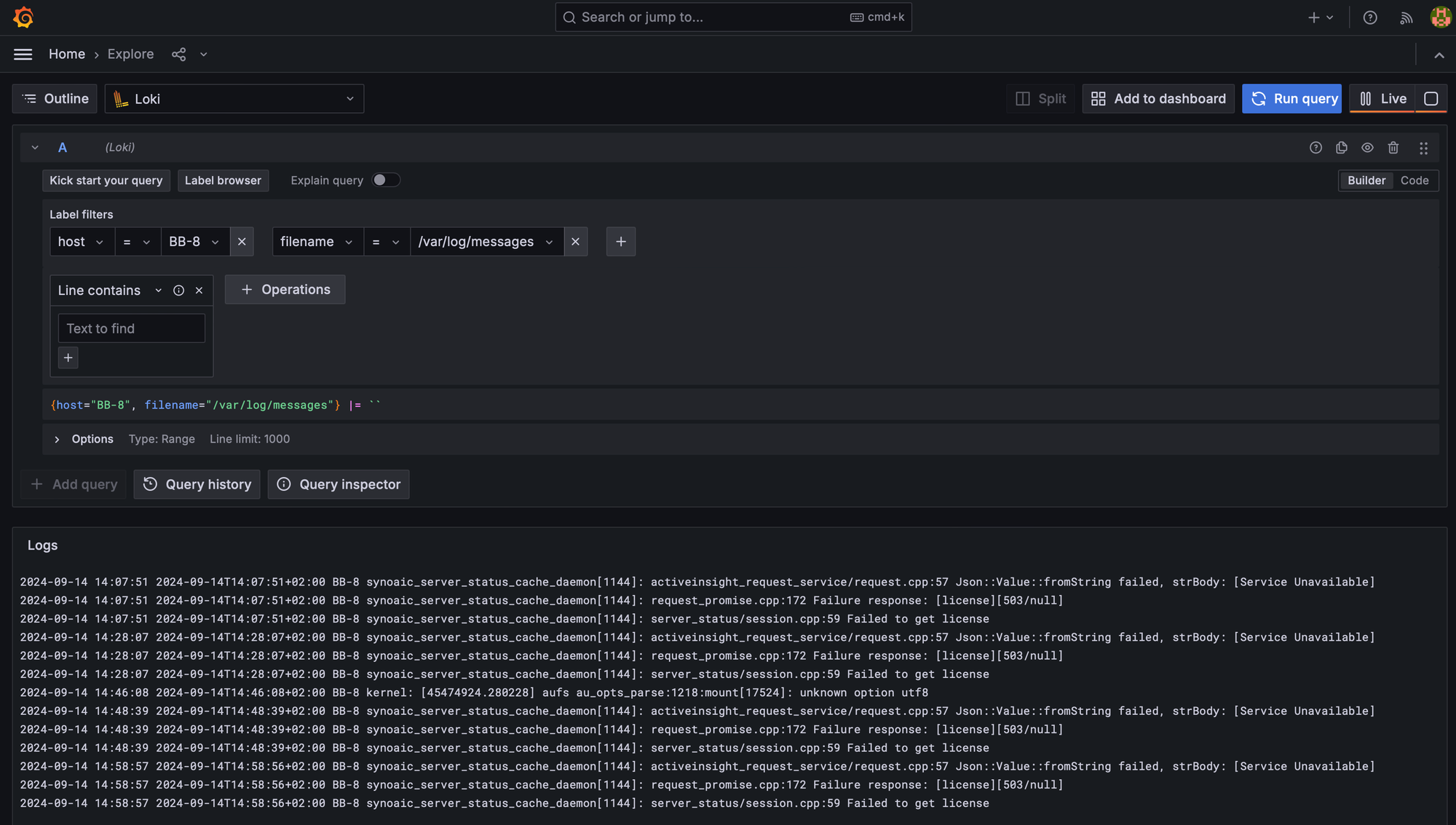

I repeated the process once more, as I was still not sure what had happened, and of course everything played out exactly the same. Time to dig into the logs. The first log I wanted to check was the messages file inside the /var/log folder. For anyone unfamiliar with it, the file can be accessed via SSH as root. Another way to read it is through a log aggregation tool, such as Loki by the Grafana team.

Loki, log aggregation platform

Checking the file turned up the following errors:

2024-09-08T14:11:23+02:00 D-0 updater[4054]: updater.c:5977 Start of the updater...

2024-09-08T14:11:23+02:00 D-0 updater[4054]: updater.c:6283 ==== Start flash update ====

2024-09-08T14:11:23+02:00 D-0 updater[4054]: updater.c:6287 This is X86 platform

2024-09-08T14:11:24+02:00 D-0 updater[4054]: boot/boot_lock.c(259): failed to mount boot device /dev/synoboot2 /tmp/bootmnt (errno:2)

2024-09-08T14:11:24+02:00 D-0 updater[4054]: updater.c:5577 Failed to mount boot partition

2024-09-08T14:11:24+02:00 D-0 updater[4054]: updater.c:3191 No need to reset reason for v.69057

2024-09-08T14:11:24+02:00 D-0 updater[4054]: updater.c:6897 Failed to accomplish the update! (errno = 21)

2024-09-08T14:11:24+02:00 D-0 smallupd@ter[4049]: proc_service/exec.cpp:30 exec '/var/tmp/[email protected]/updater' failed: [0x0000 (null):0]

2024-09-08T14:11:24+02:00 D-0 smallupd@ter[4049]: private/encrypted/smallupdate_updater.cpp:52 failed to exec updater at '/var/tmp/[email protected]/updater', with args: -qir, /, [NULL] and [NULL]

2024-09-08T14:11:24+02:00 D-0 smallupd@ter[4049]: small_update.cpp:219 Failed to apply smallupdate (flash)

2024-09-08T14:11:24+02:00 D-0 update-entry[4023]: proc_service/exec.cpp:30 exec '/smallupd@te/smallupd@ter' failed: [0x0000 (null):0]

2024-09-08T14:11:24+02:00 D-0 update-entry[4023]: private/encrypted/smallupdate.cpp:204 update phase 'flash' is failed

Error output from the messages log
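On a busy production NAS the messages file is long, so it can help to filter it down to the lines that matter. What follows is only a minimal Python sketch, not a Synology tool: it assumes the syslog-style layout seen in the excerpt above (timestamp, host, process[pid], message), and the names `LINE_RE` and `find_update_failures` are hypothetical helpers made up for this illustration. The sample lines are copied from the log above.

```python
import re

# Assumed layout, based on the excerpt above:
# <ISO timestamp> <host> <process>[<pid>]: <message>
LINE_RE = re.compile(
    r"^(?P<ts>\S+)\s+(?P<host>\S+)\s+"
    r"(?P<proc>[^\s\[]+)\[(?P<pid>\d+)\]:\s+(?P<msg>.*)$"
)


def find_update_failures(lines):
    """Return (timestamp, process, message) for lines whose message mentions a failure."""
    hits = []
    for line in lines:
        match = LINE_RE.match(line.strip())
        # Case-insensitive check so both "failed" and "Failed" are caught.
        if match and "fail" in match.group("msg").lower():
            hits.append((match.group("ts"), match.group("proc"), match.group("msg")))
    return hits


# Three lines taken verbatim from the log excerpt above.
SAMPLE = [
    "2024-09-08T14:11:23+02:00 D-0 updater[4054]: updater.c:6283 ==== Start flash update ====",
    "2024-09-08T14:11:24+02:00 D-0 updater[4054]: boot/boot_lock.c(259): failed to mount boot device /dev/synoboot2 /tmp/bootmnt (errno:2)",
    "2024-09-08T14:11:24+02:00 D-0 updater[4054]: updater.c:5577 Failed to mount boot partition",
]

if __name__ == "__main__":
    for ts, proc, msg in find_update_failures(SAMPLE):
        print(ts, proc, msg)
```

To run it against a real file, the same function can be fed the lines of a copy of the messages log, for example `find_update_failures(open("messages"))`, where the file name is whatever the log was saved as.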

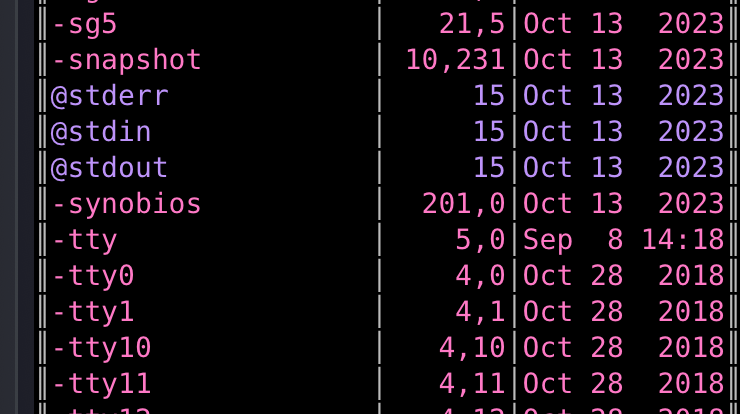

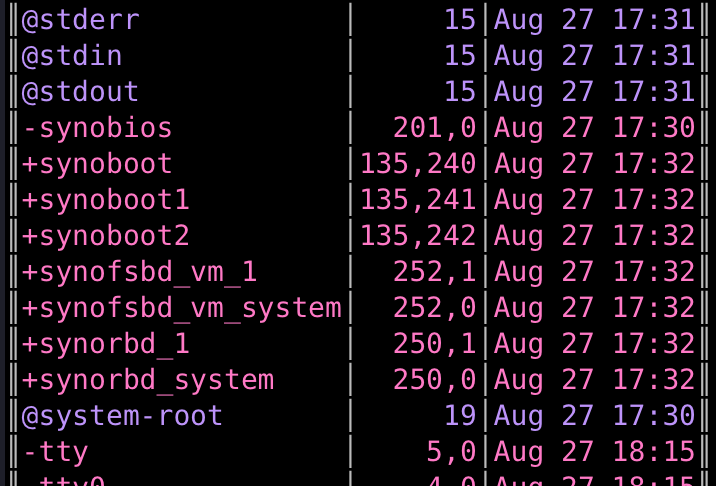

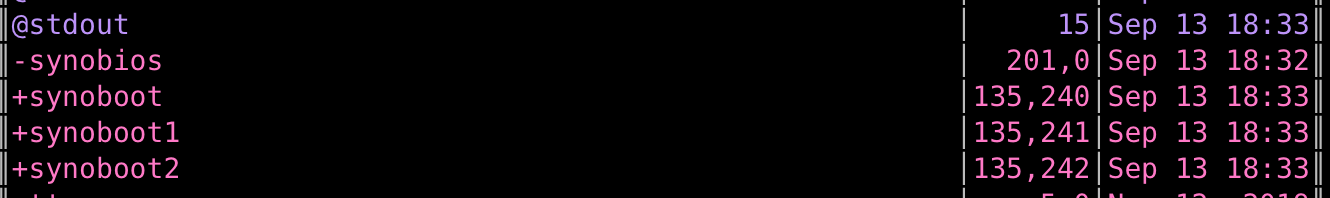

The main takeaway from the log was the "failed to mount boot device /dev/synoboot2" line. While it was odd that the updater was unable to mount the boot partition, a look at the actual contents of the /dev path explained why this was happening.

Side-by-side comparison of the /dev path between the RS2423+ (left) and DS918+ (right)

The entire "synoboot" stack was missing. This was unfortunate, as it meant that a simple reboot would probably end with a blinking "blue light" and the NAS unable to boot at all.
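The same check can be scripted so it is easy to repeat before any future update. This is a rough Python sketch under stated assumptions: only /dev/synoboot2 is confirmed by the log above, while the other node names in `expected` (synoboot and synoboot1) are my assumption about what a healthy unit exposes, and `missing_boot_nodes` is a hypothetical helper, not part of DSM.

```python
import glob
import os


def missing_boot_nodes(dev_dir="/dev", expected=("synoboot", "synoboot1", "synoboot2")):
    """Return the expected synoboot entries that are absent from dev_dir.

    Only synoboot2 appears in the log above; the other default names are
    an assumption and may differ between models.
    """
    pattern = os.path.join(dev_dir, "synoboot*")
    present = {os.path.basename(path) for path in glob.glob(pattern)}
    return [name for name in expected if name not in present]


if __name__ == "__main__":
    missing = missing_boot_nodes()
    if missing:
        print("Missing boot device nodes:", ", ".join(missing))
    else:
        print("All expected synoboot nodes are present.")
```

An empty result does not prove the boot device is healthy. It only shows that the nodes exist, which is exactly what was not the case here.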

I contacted support via the ticketing platform, wrote up all the information, and the whole process began.

The RMA process

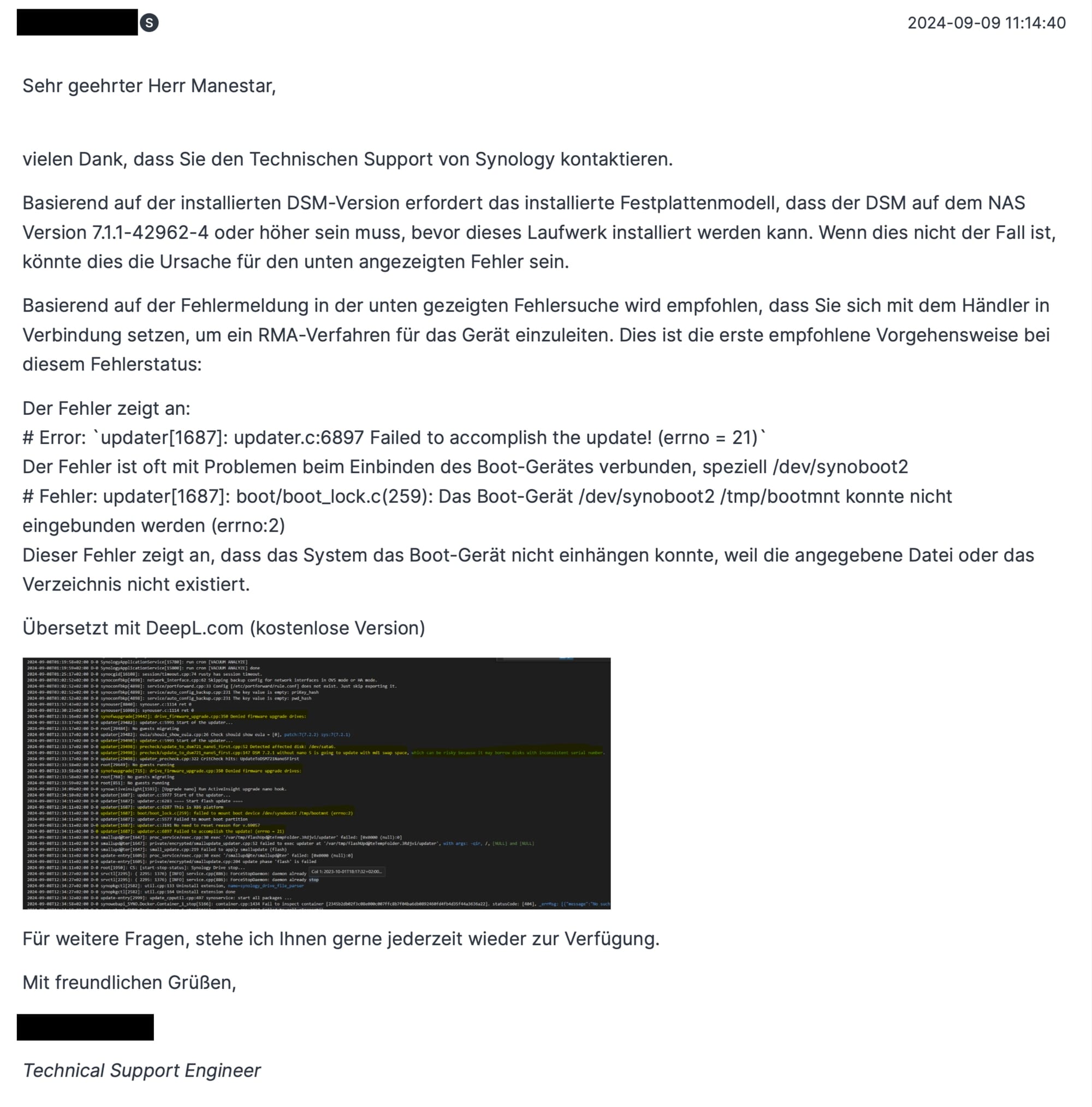

The first response came within 24 hours of opening the ticket. It was obvious that Synology support has started to use AI assistance in its process. In this particular ticket it did not look like a built-in integration just yet, but rather a support engineer using a publicly accessible platform.

It was also clear that the case had not been read carefully, so after I pointed out the actual problem again, communication from then on went in the right direction.

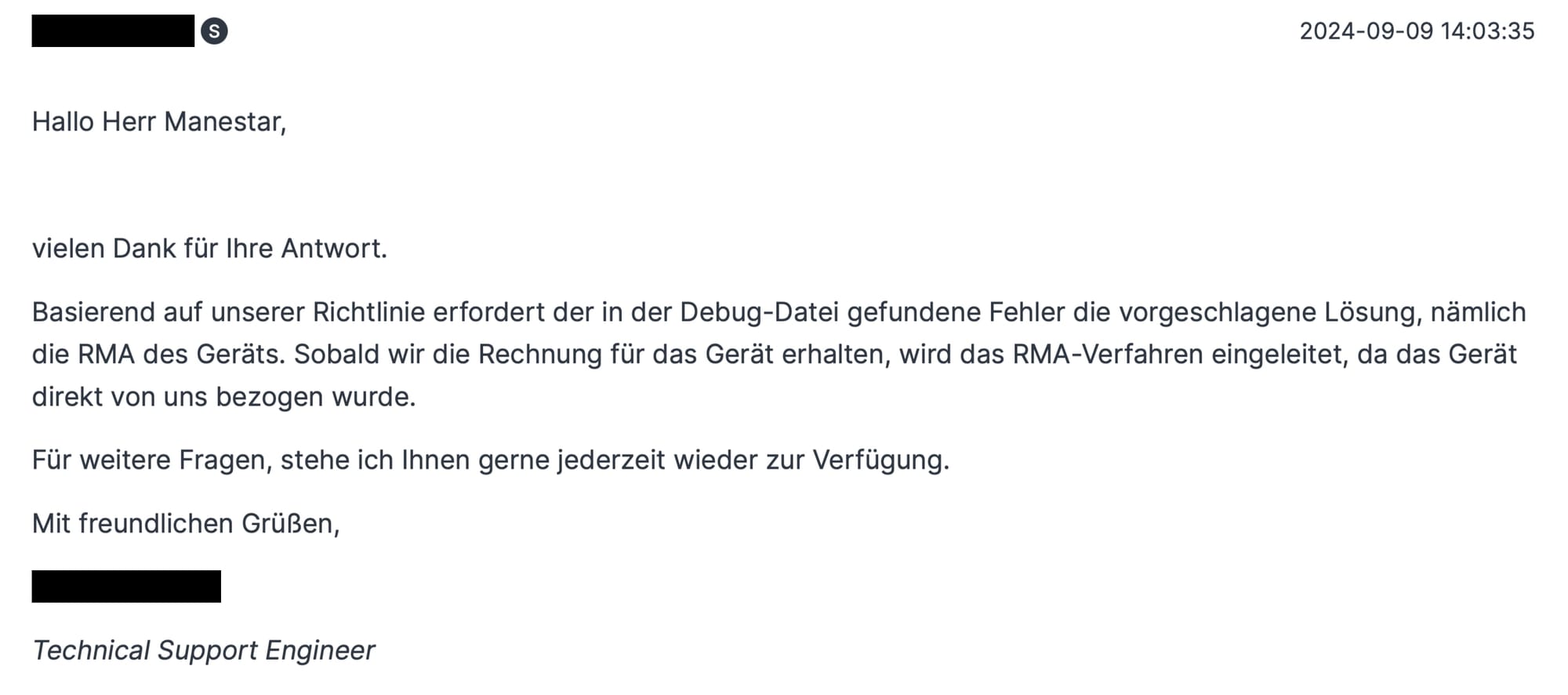

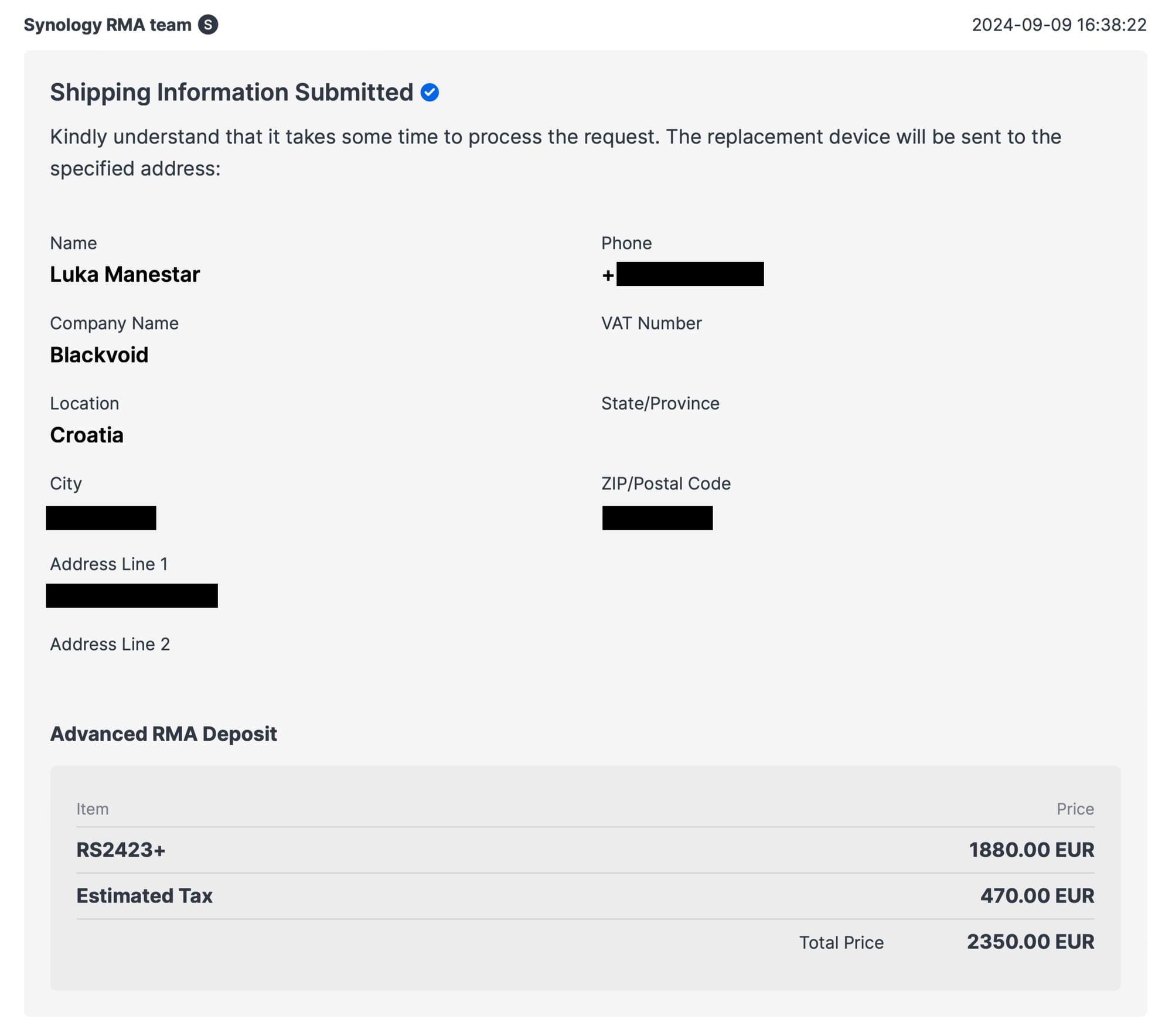

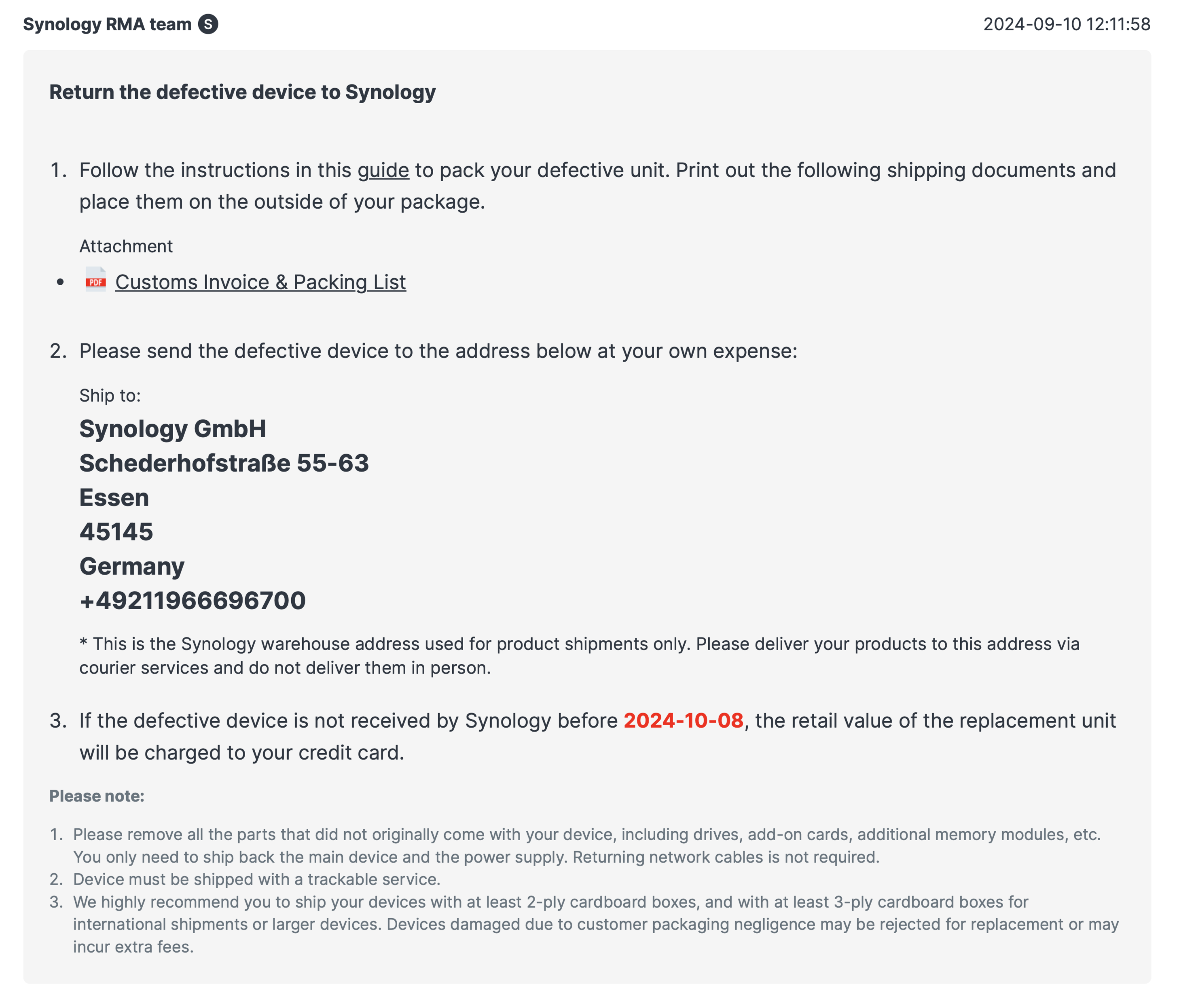

Without delay, support clearly stated that this type of error qualifies for an RMA, and the process was initiated immediately. Next came the proof of purchase (invoice), and the Synology RMA team took over the support conversation from there. This was a nice, seamless handover, as the entire communication stays part of one ongoing ticket.

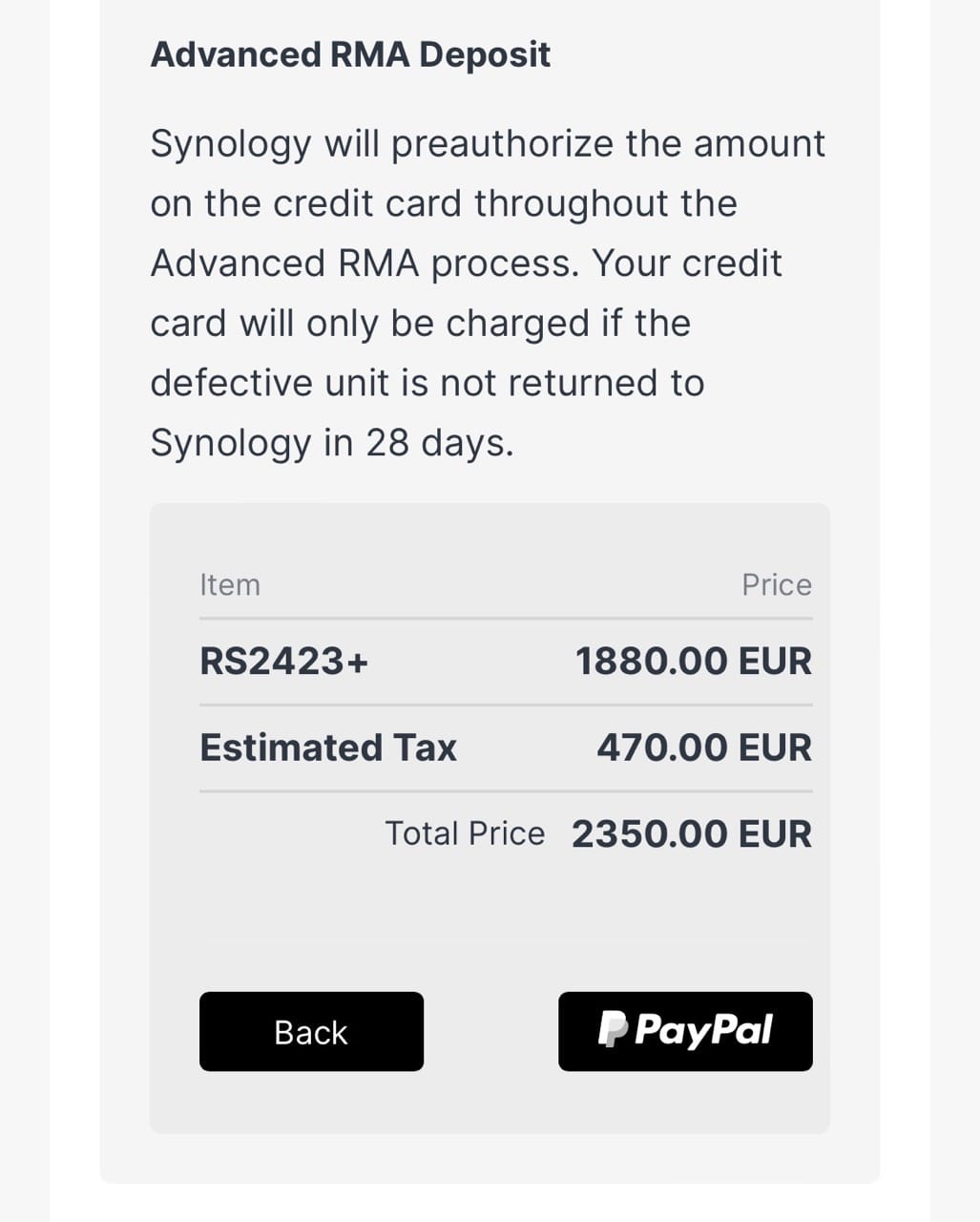

Up until that point, all was going well. If there is a single issue that I have with this whole process, it is that there is only one payment method for the deposit, and that is PayPal. The wizard offers a single payment button bound to PayPal, so no other options are available.

The reason for the deposit is, of course, that the faulty device needs to be returned within 28 days, or Synology will charge the PayPal account for the value of the device in question.

The RMA deposit can only be done via PayPal

It took only a few hours from the start of the RMA process until the item was ready to be shipped, so I can't blame them for forcing the PayPal method, because the most important thing was to get the replacement unit as soon as possible.

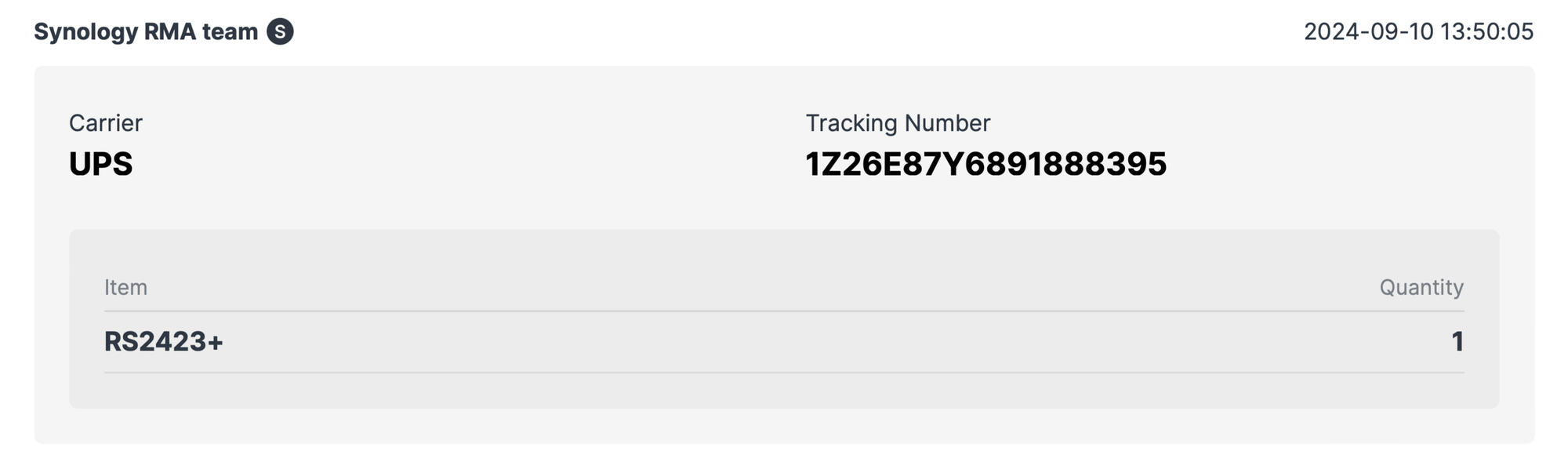

The following day the RMA team confirmed the deposit and UPS picked up the new NAS. Three days later, the unit arrived.

The resolution

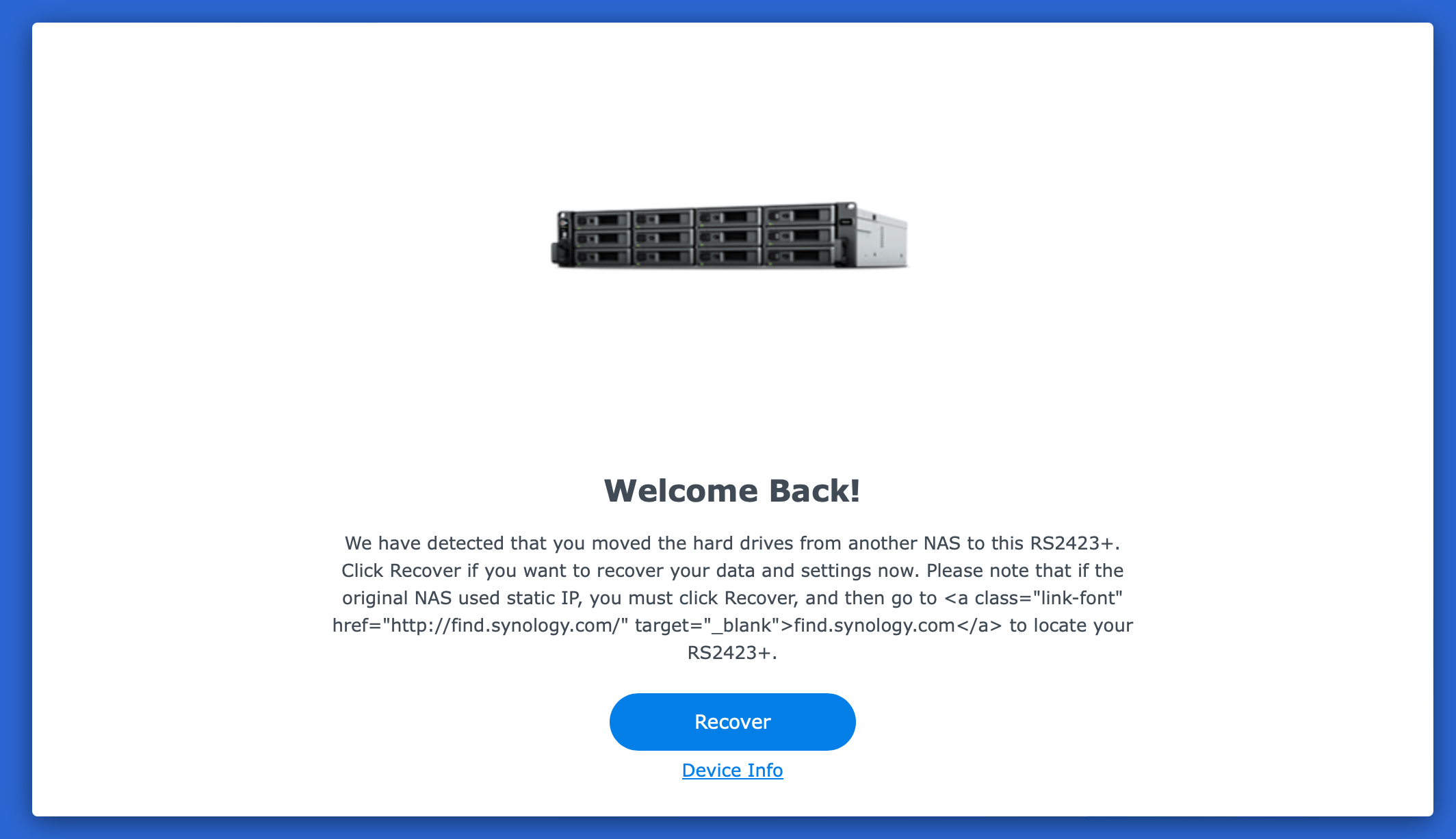

What followed was a simple disk migration process explained in Synology's KB article. In short, the steps include:

- Set up the new unit and update it to the same DSM version as the defective one. In this case that meant installing DSM 7.2.1-UP1. Because the unit came with DSM 7.1 (stock), this was not a problem

- Once the update has been completed, migrate the disks from one device to the other

- Follow the recovery process until it is completed

While I had the complete configuration exported, including backups of critical systems and apps, none of that was needed. The recovery process simply detected that the drives had been moved from one unit to another, and asked to align the settings with the new hardware model. After another update ring reached 100%, the device rebooted and all was well.

Not a single file, setting, or configuration was wrong. The IP address was also recovered, including users, LDAP settings, etc. Docker stacks and containers booted with no issues at all. As if nothing happened.

From a user perspective, this was exactly how the whole process was supposed to go. Opening the ticket, activating the RMA, migrating the drives, and boom, done.

Total downtime from start to finish was 14 minutes, including the update to 7.2.1-UP5 and finally to 7.2.2.

So, is Synology EW+ worth it? I would say yes, without a doubt. Especially with a more expensive or complex setup, it is good to know that for the following 4-5 years (depending on the model), you are entitled to an advanced RMA process. The whole proceedings, apart from the initial AI response, were quick, precise, and professional, and on top of it all, what arrived was a brand new device, not a refurbished one.

It might seem like a steep price to pay for something that might never be needed, like the RMA, but sometimes having multiple backups will not save you from the fact that the unit itself is faulty. This is also a factor that needs to be taken into consideration, as it can affect your entire setup. Having a 30, 40, or 100TB setup not working because of a "simple" error like this is reason enough to invest in the Extended Warranty Plus service. If you are in North America or Europe, be sure to activate it within 90 days of the purchase. You can thank yourself later.