Synology SA3400 - Part 3 - Virtualization

SA3400 series articles:

Intro - review and testing

Part 1 - Installation and configuration

Part 2 - Backup and restore

Part 3 - Virtualization

Part 4 - Multimedia

So far, we've seen how to set up and configure the SA3400 NAS, how it works with Synology backup tools, and we have even touched on virtualization.

With this article, I would like to continue in that direction and see how the NAS behaves when it works with a dedicated hypervisor host or, on the other hand, when it runs a type 2 hypervisor from its own package offering.

In the tests that follow, we'll look at how to configure the SA3400 as iSCSI data storage for virtual machines running on an HP ProLiant DL360 Gen8, how it behaves in various situations, and which disk array gives better results.

I will also run disk tests inside the virtual machines themselves and see just how fast everything works together over a 10G connection.

VMM (Synology Virtual Machine Manager)

I'll start with the Synology platform, Virtual Machine Manager (VMM), followed by VMware ESXi.

VM creation and 10G client configuration

I will skip the initial installation and configuration of the VMM package and focus straight away on creating the Windows 10 VMs (one for each array) and configuring the 10G adapter.

Once the Windows 10 installation image is set up in VMM, creation of the individual VMs can begin.

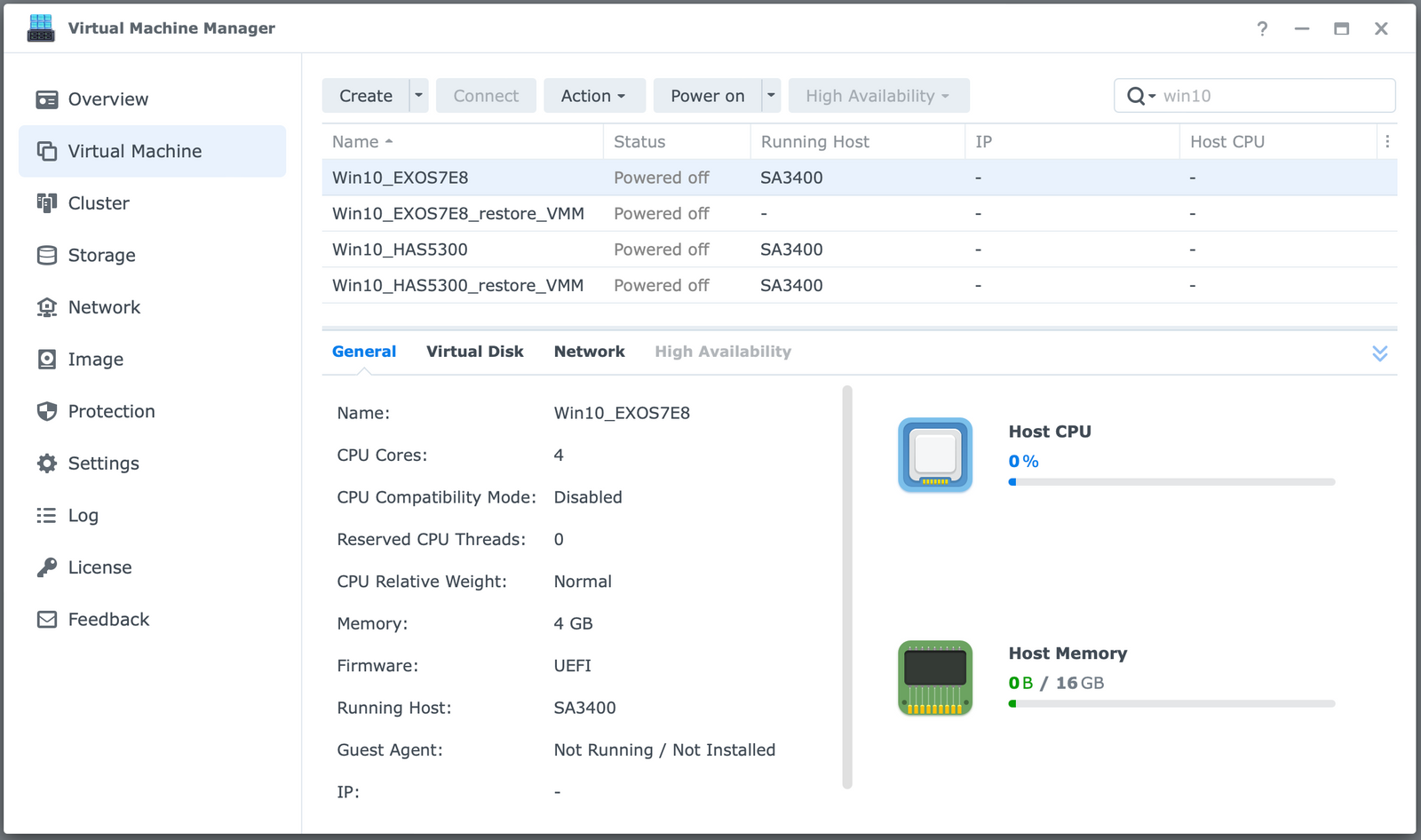

The image above shows two VMs (plus their duplicates, which can be ignored for now) along with their CPU and RAM configuration.

The VMs are configured with the following specifications:

CPU: 4 vCPU

RAM: 4GB

HDD: 50GB

NET: 10G

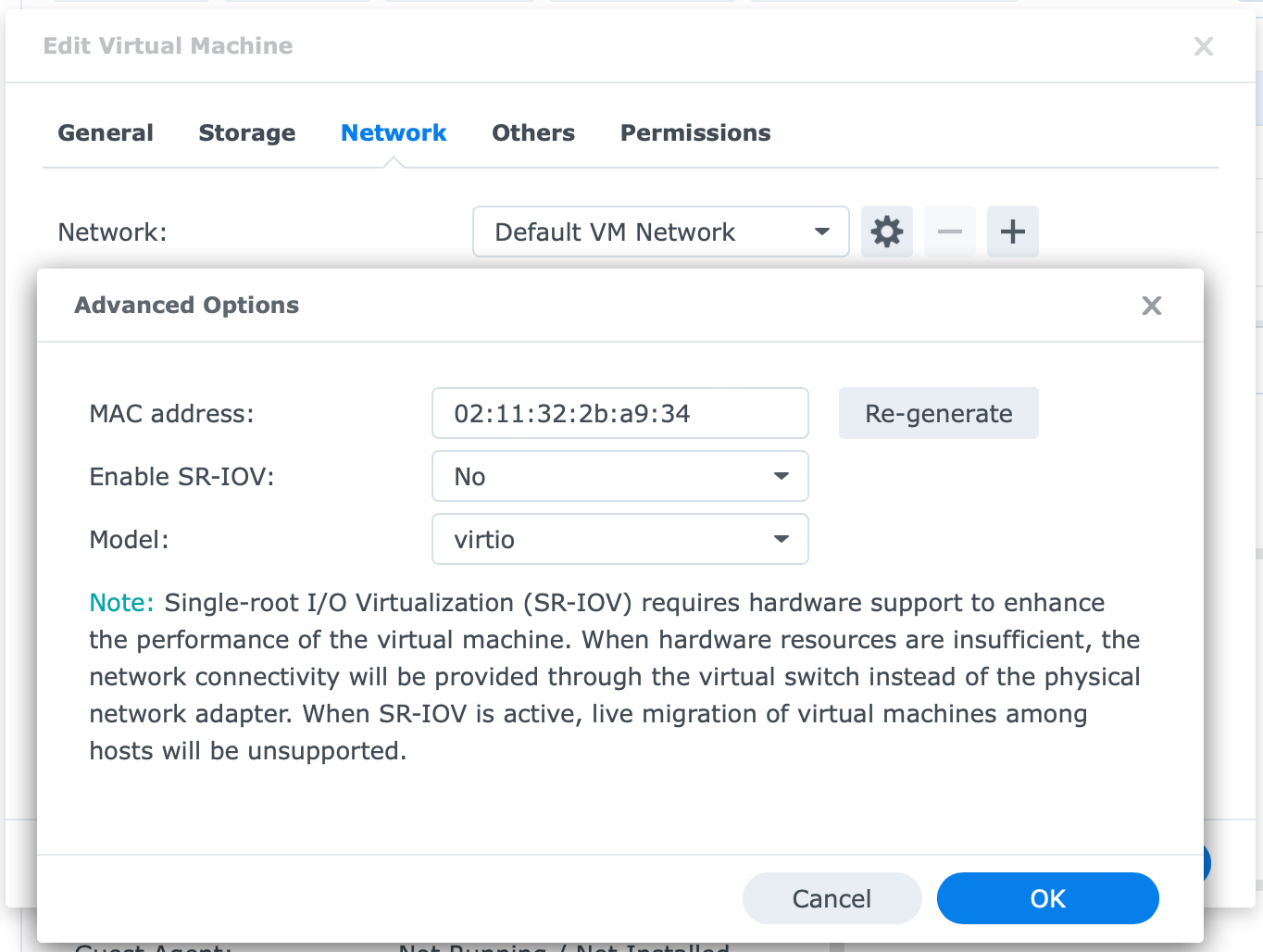

If you want a 10G network connection inside your virtual machine, the most important step is configuring the right virtual network adapter model.

In VMM, this is configured as follows.

After creating a virtual machine, open the Network tab, click the advanced options (gear) icon, and select virtio from the Model menu.

This will give you a 10G network adapter inside your virtual machine. Windows does not ship with VirtIO drivers, so they have to be installed in the guest before the adapter works.

The installation media in my tests was the official Windows 10 OS ISO file located on the SA3400 NAS.

The installation took an astonishingly long time, regardless of which array the virtual machine was on, while the CPU utilization of the NAS itself averaged only 7%.

Strangely, the installation seemed to pause at times, even though nothing on the NAS side indicated any load.

Installation tests and boot up

Installation times on both arrays were equally slow, which surprised me, because I expected the installation to finish within 7-8 minutes, as it does in the ESXi environment.

On the Synology VMM hypervisor, it was nowhere near that fast.

Win10 (4vCPU/4GB) HAS5300 (RAID6) VM install 19 min

Win10 (4vCPU/4GB) EXOS7N8 (RAID6) VM install 20 min

Both installations behaved identically, indicating that the two disk arrays performed at the same speed. Unfortunately, the installation time is almost three times longer than on the ESXi hypervisor.
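As a rough check of "almost three times", the ratio can be computed from the VMM install times above and the 6-7 minute ESXi installs reported in the ESXi section of this article. This small Python sketch uses only those figures; comparing against both ends of the ESXi range is my own choice, to show the spread.

```python
# VMM install times reported in this article, in minutes.
vmm_install_min = {"HAS5300": 19, "EXOS7N8": 20}

# ESXi installs took 6-7 minutes per VM; keep both ends of the range.
esxi_range_min = (6, 7)


def slowdown(vmm_min: float, esxi_min: float) -> float:
    """How many times longer the VMM install took than the ESXi install."""
    return vmm_min / esxi_min


for array, minutes in vmm_install_min.items():
    low = slowdown(minutes, esxi_range_min[1])   # vs. the slower ESXi install
    high = slowdown(minutes, esxi_range_min[0])  # vs. the faster ESXi install
    print(f"{array}: {low:.1f}x to {high:.1f}x longer on VMM")
```

Depending on which end of the ESXi range you compare against, the VMM installs come out roughly 2.7 to 3.3 times slower.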

To be fair, both installations completed without any problems, just slowly.

Booting a configured virtual machine is a different story, and a much better one. The VMs load equally quickly from both disk arrays, with only minor differences.

Win10 (4vCPU/4GB) HAS5300 (RAID6) VM boot 18.29 s

Win10 (4vCPU/4GB) EXOS7N8 (RAID6) VM boot 21.04 s

The VMs are generally ready about 20 seconds after power-on, which is perfectly acceptable. I will show the network speeds at the end, after the ESXi hypervisor section.

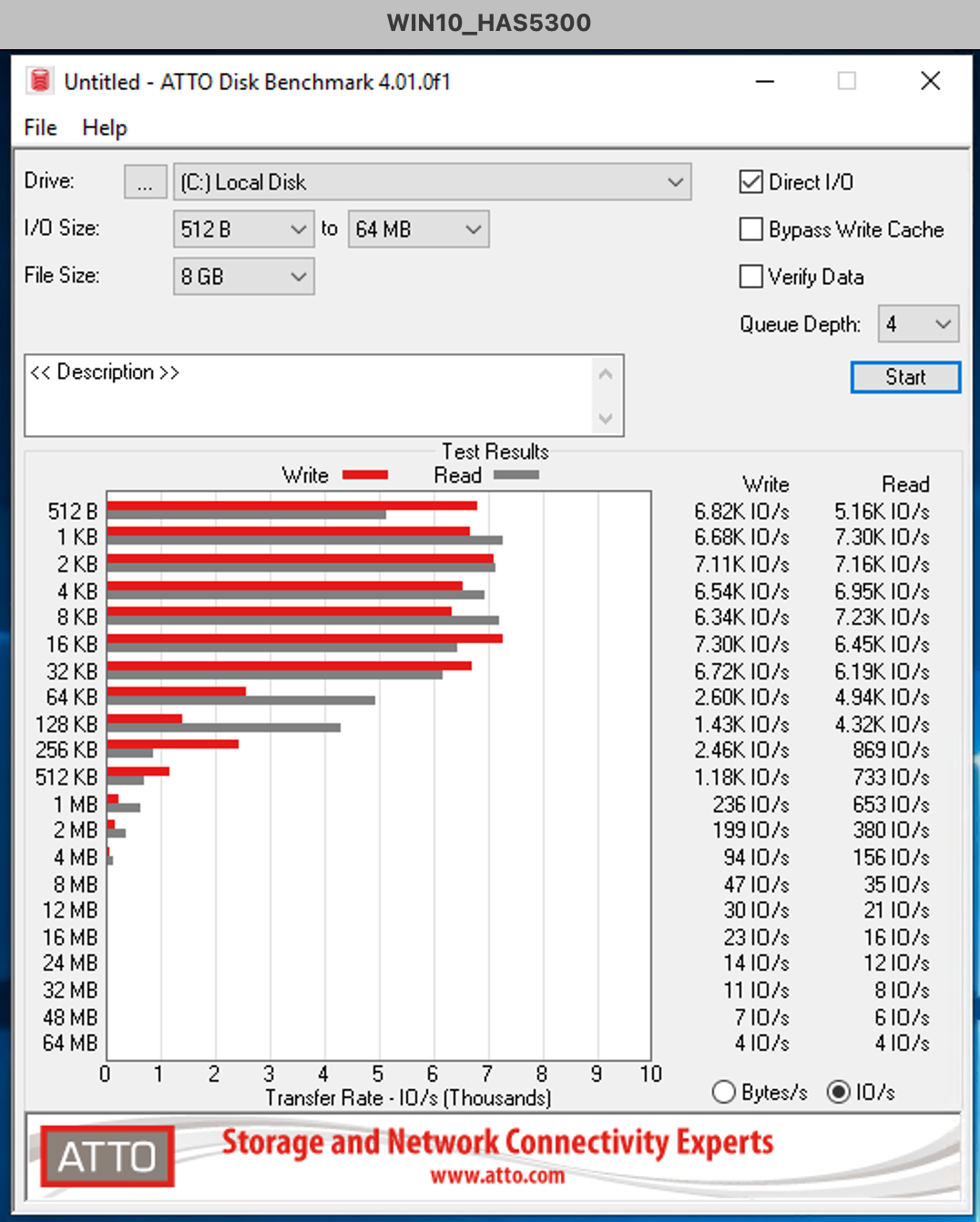

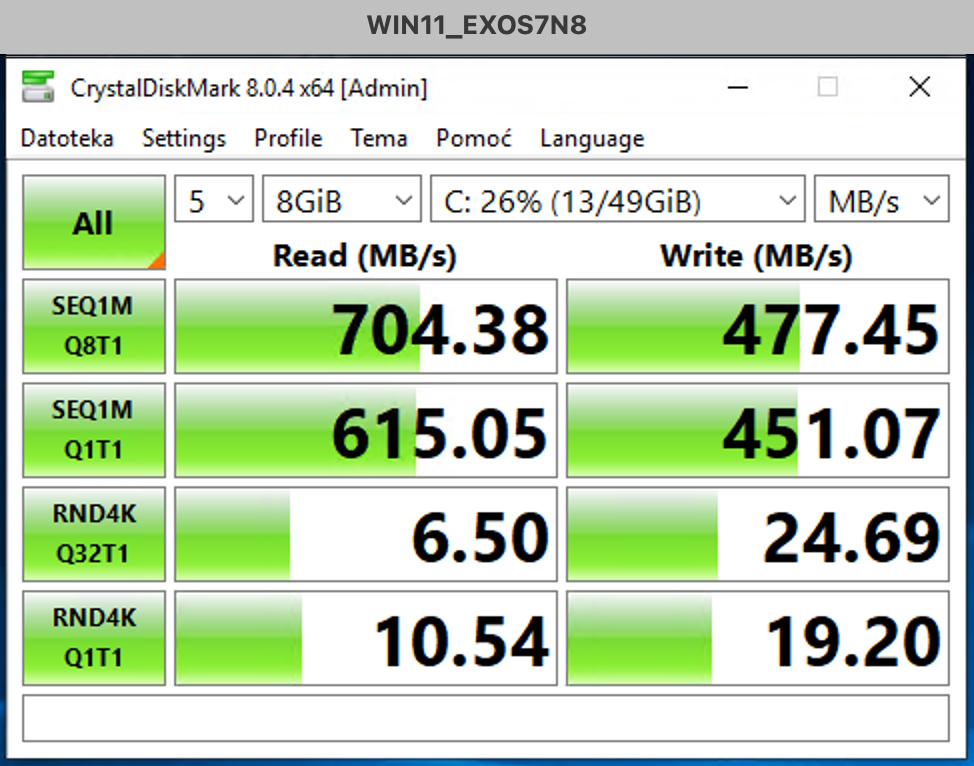

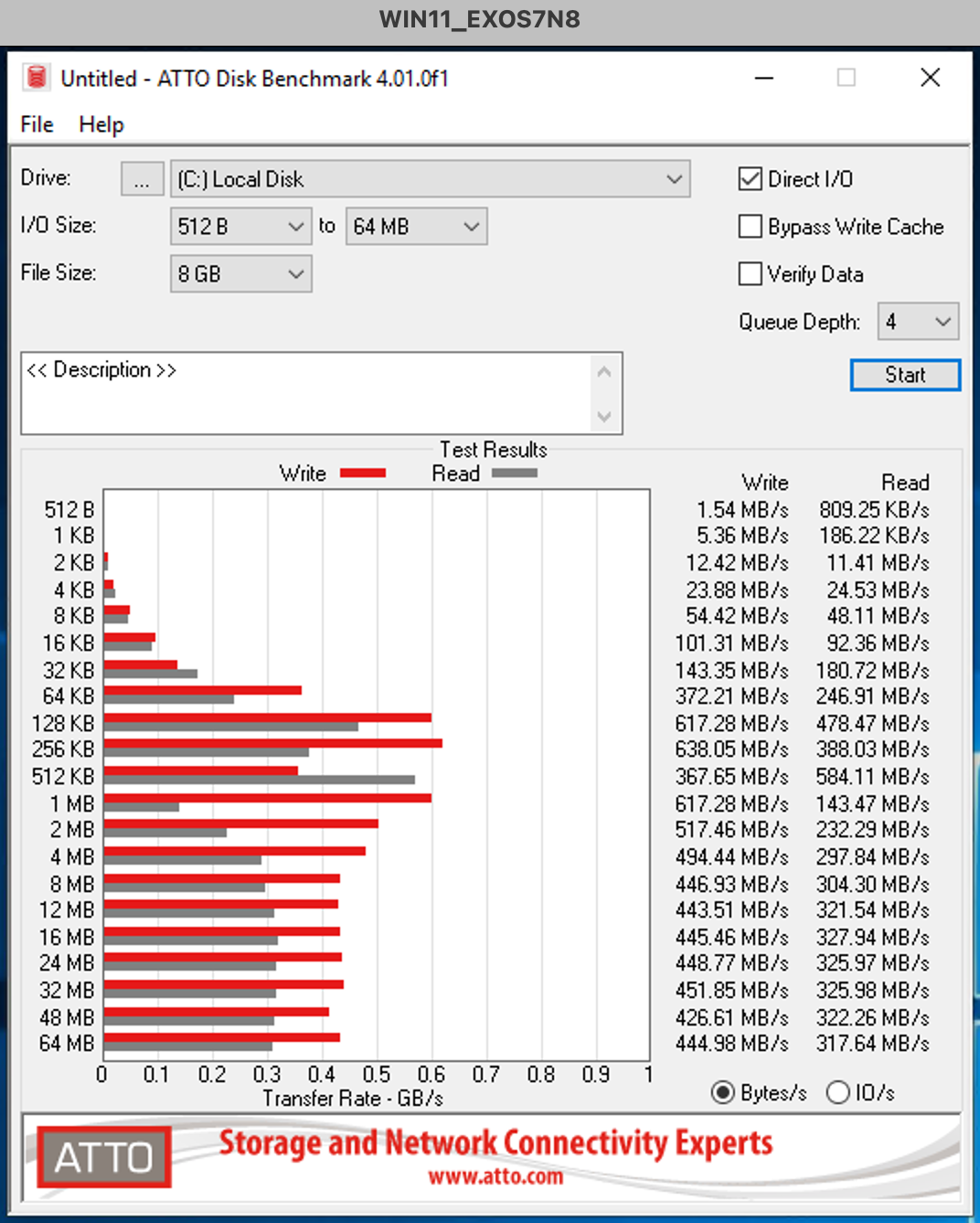

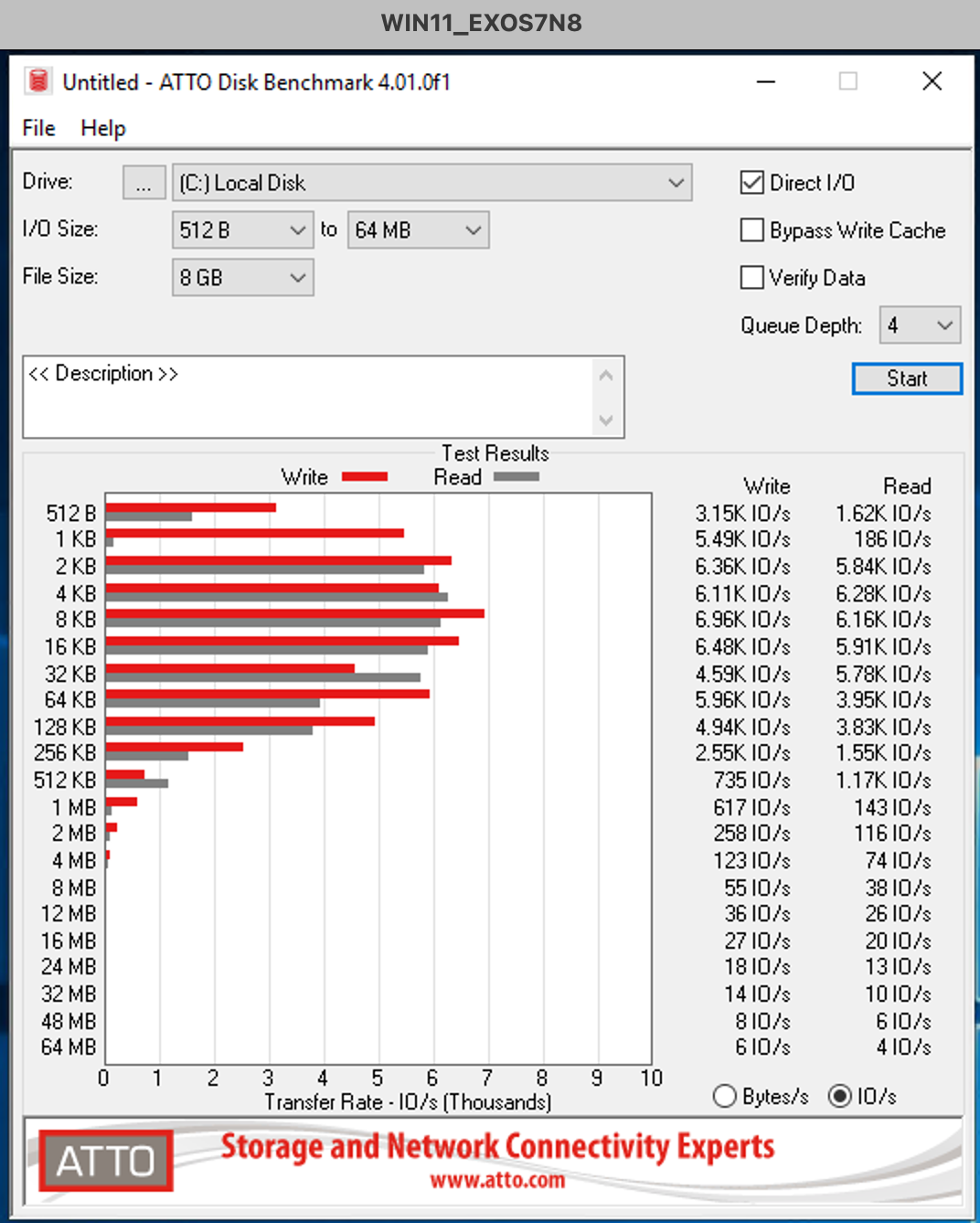

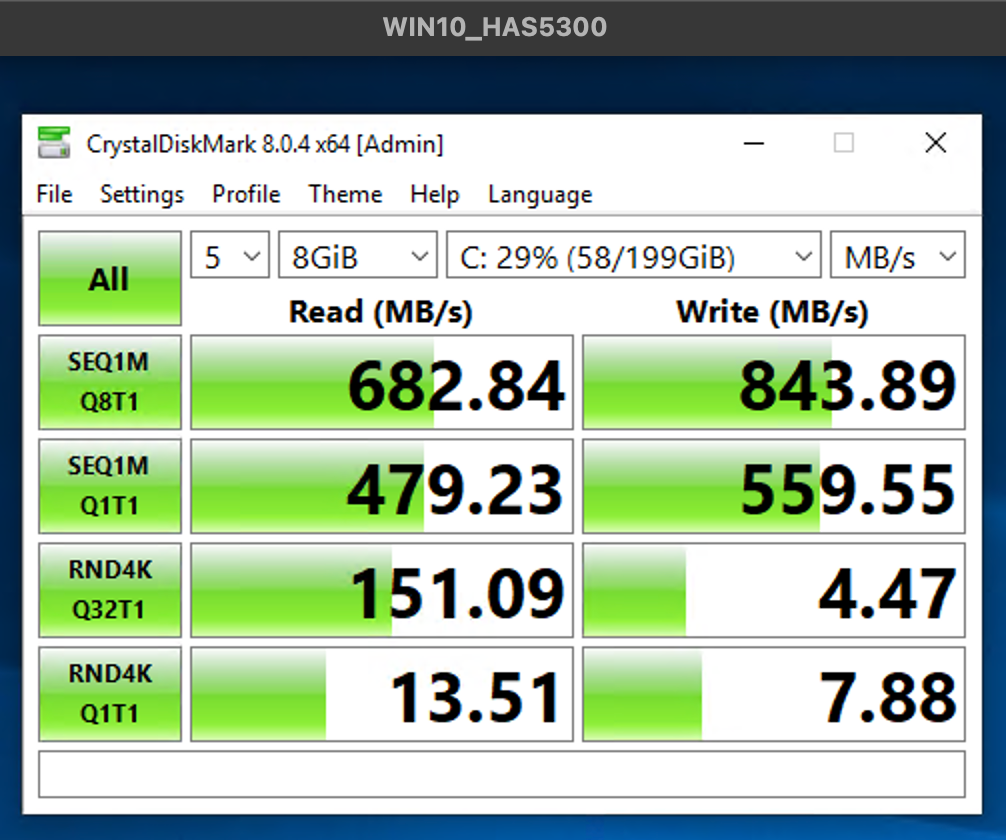

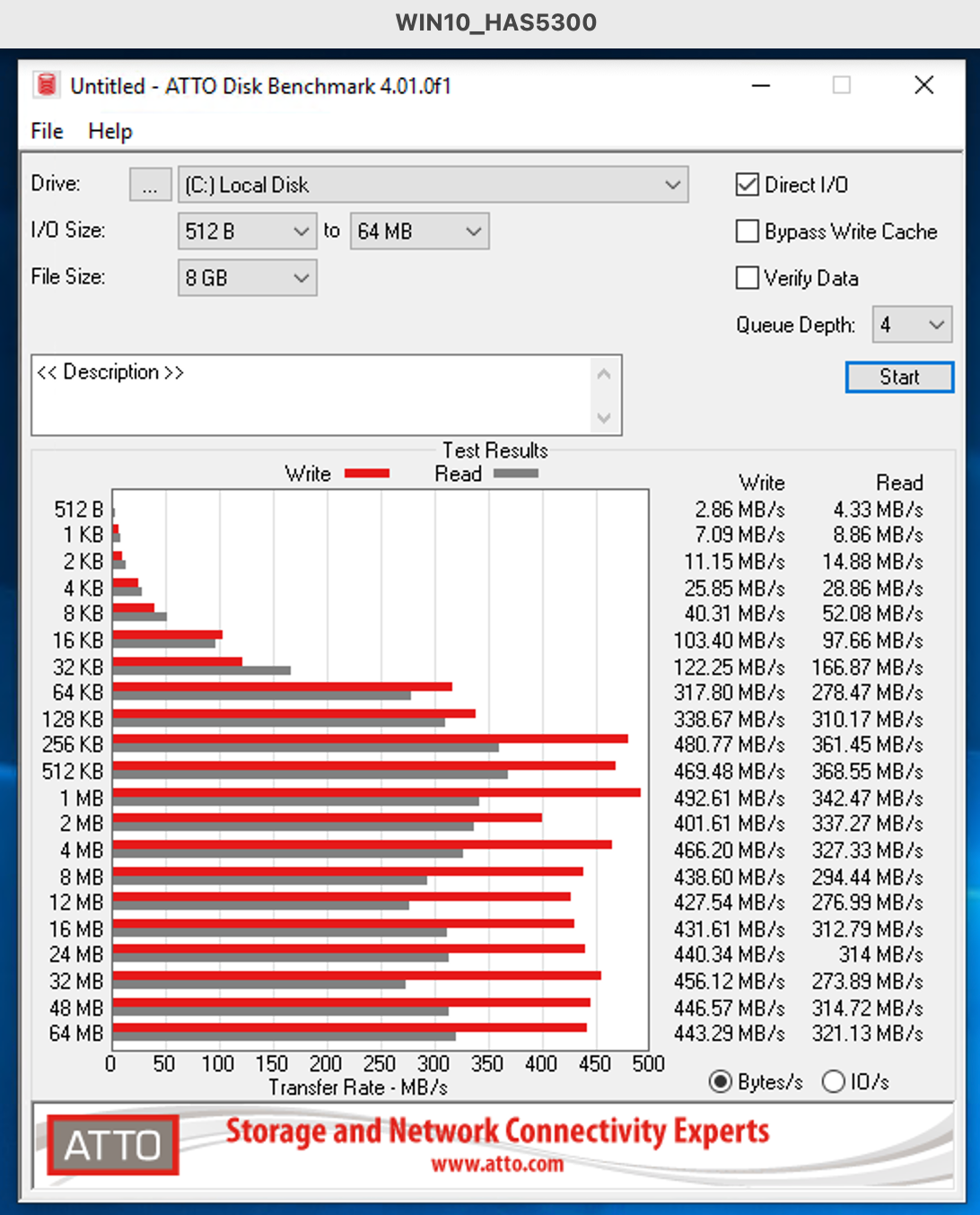

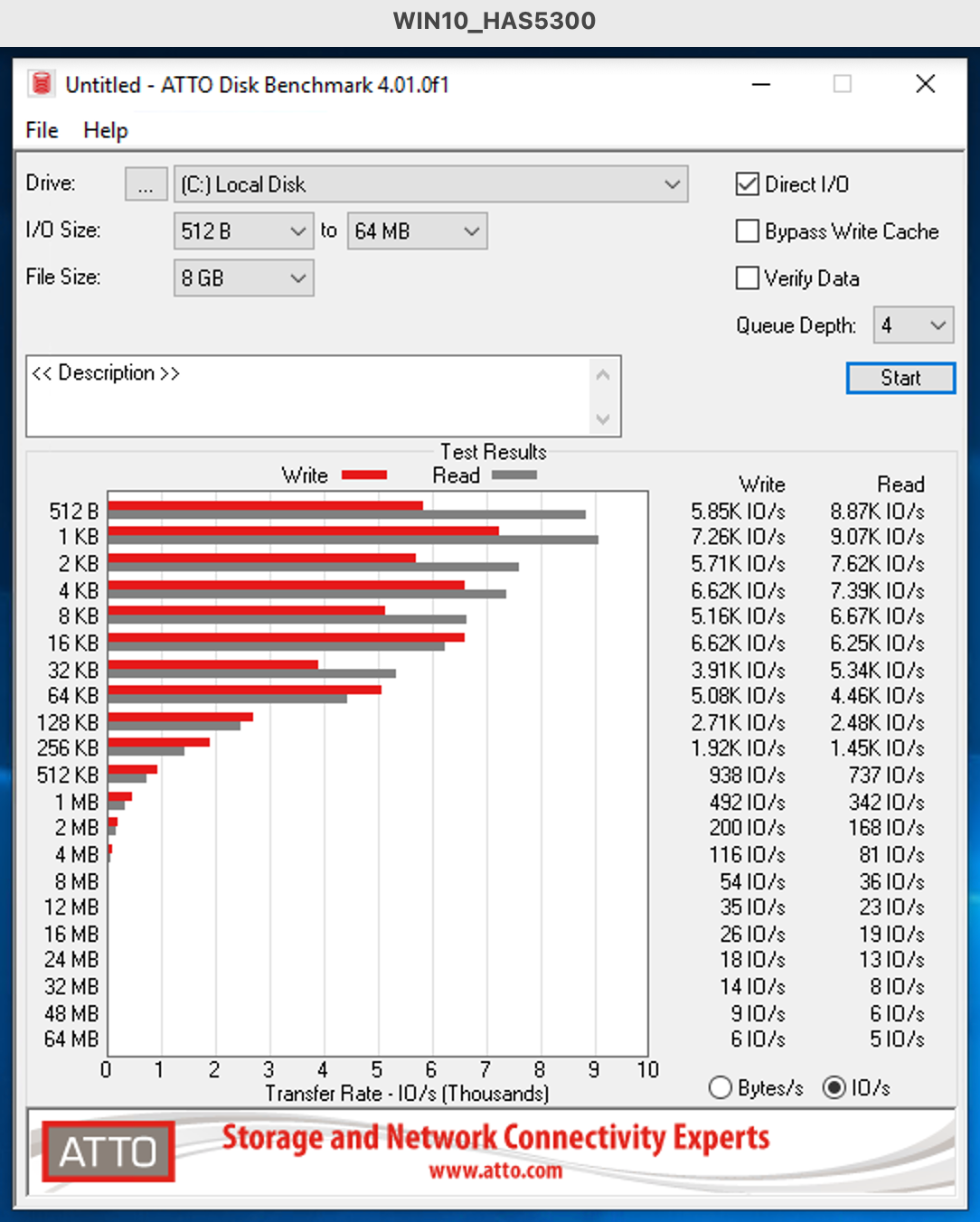

Crystal and ATTO disk benchmarks (Synology VMM)

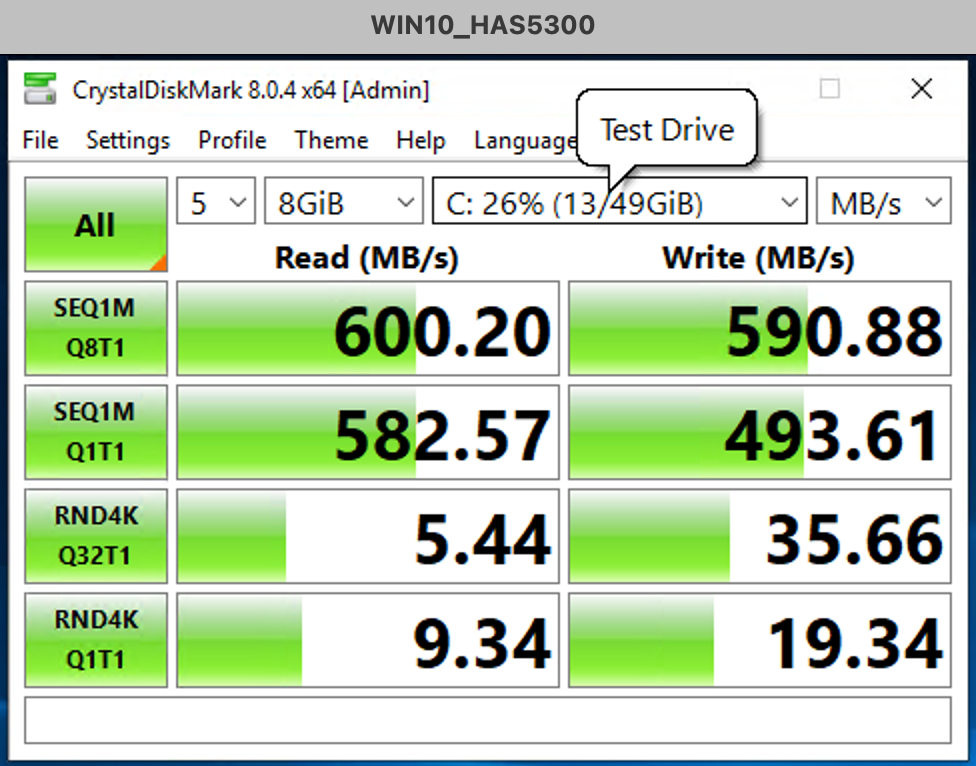

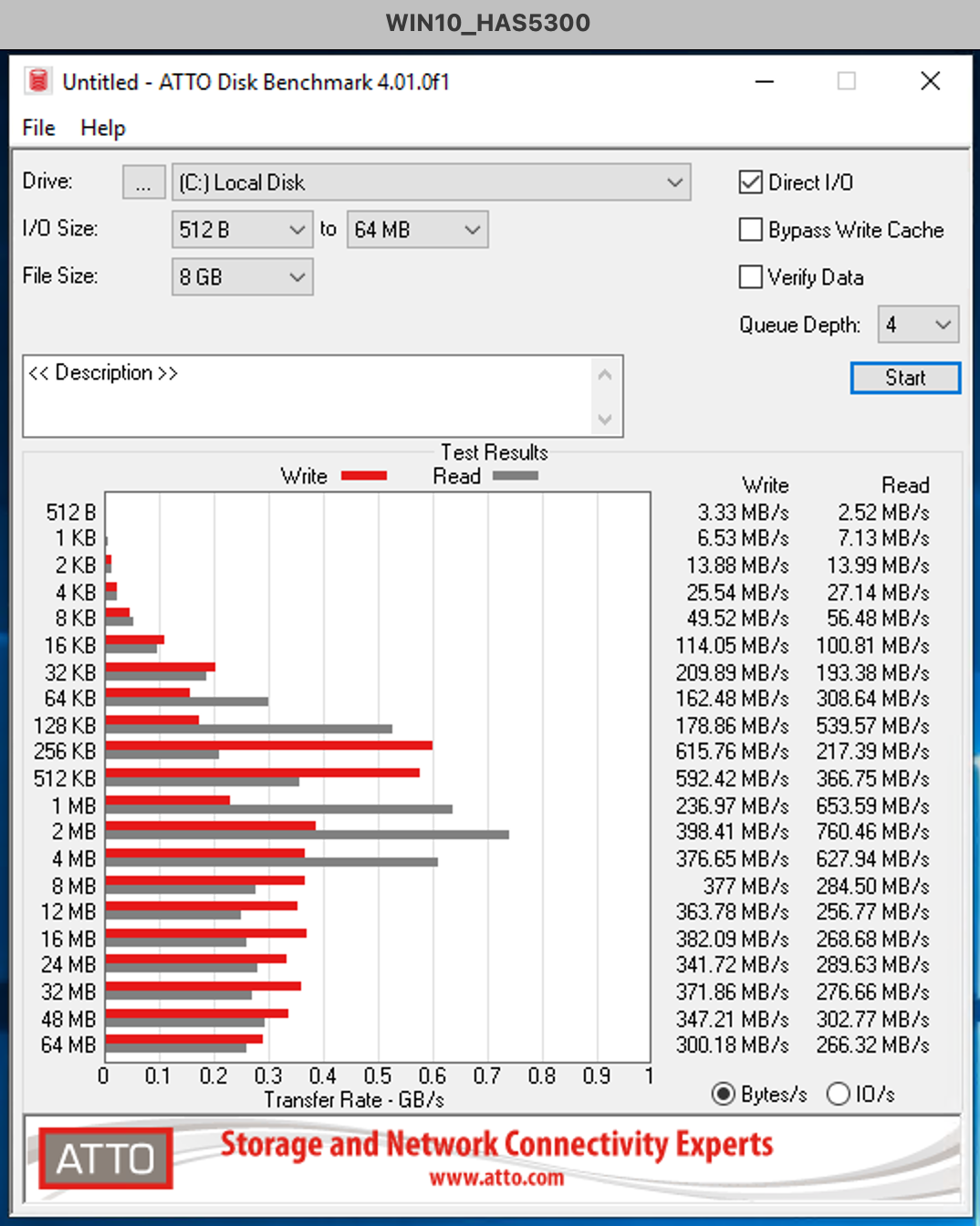

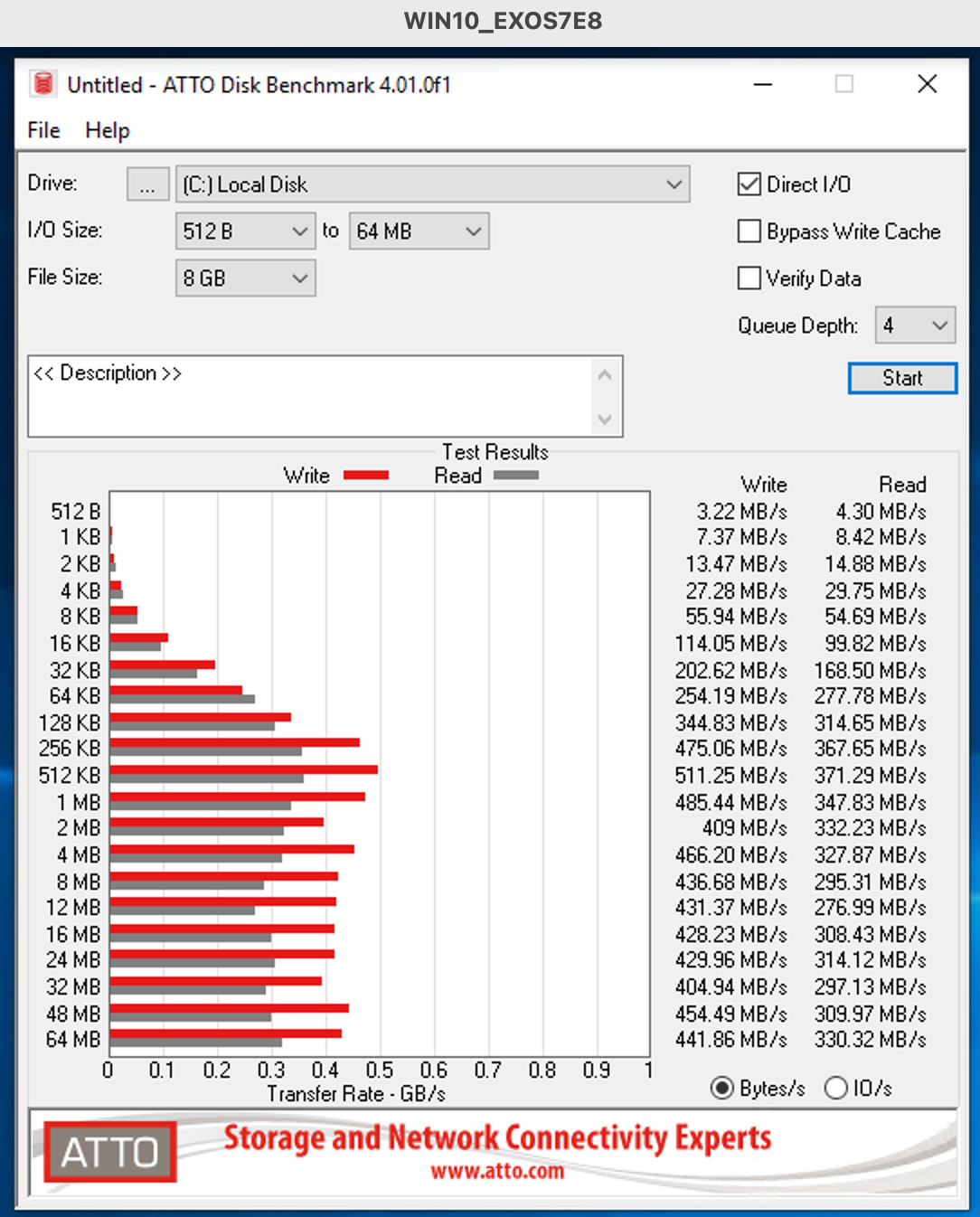

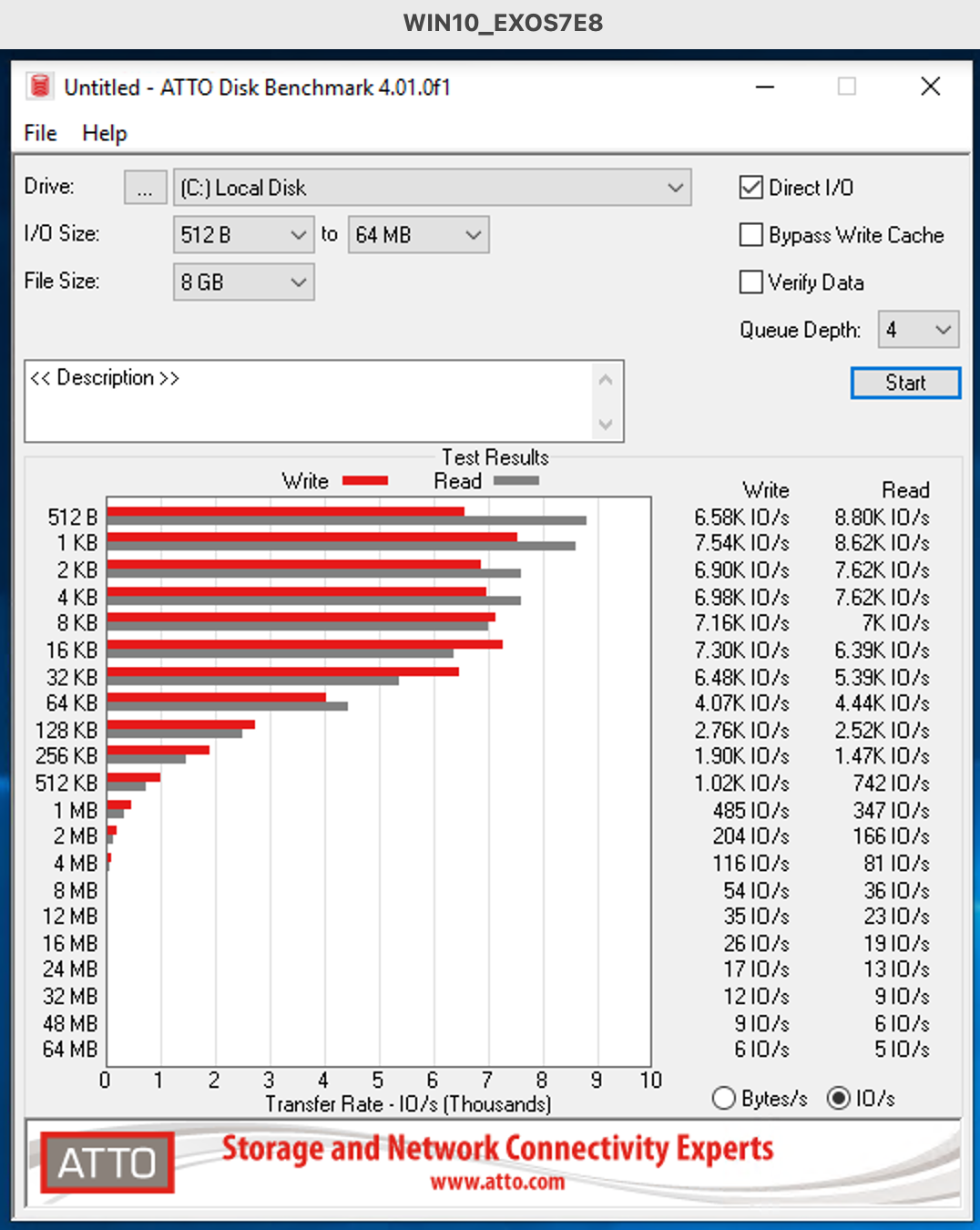

Another interesting aspect of this environment is virtual disk performance, which I tested with CrystalDiskMark and ATTO Disk Benchmark.

Below are the results from the Windows 10 VM located on the Synology HAS5300 RAID array.

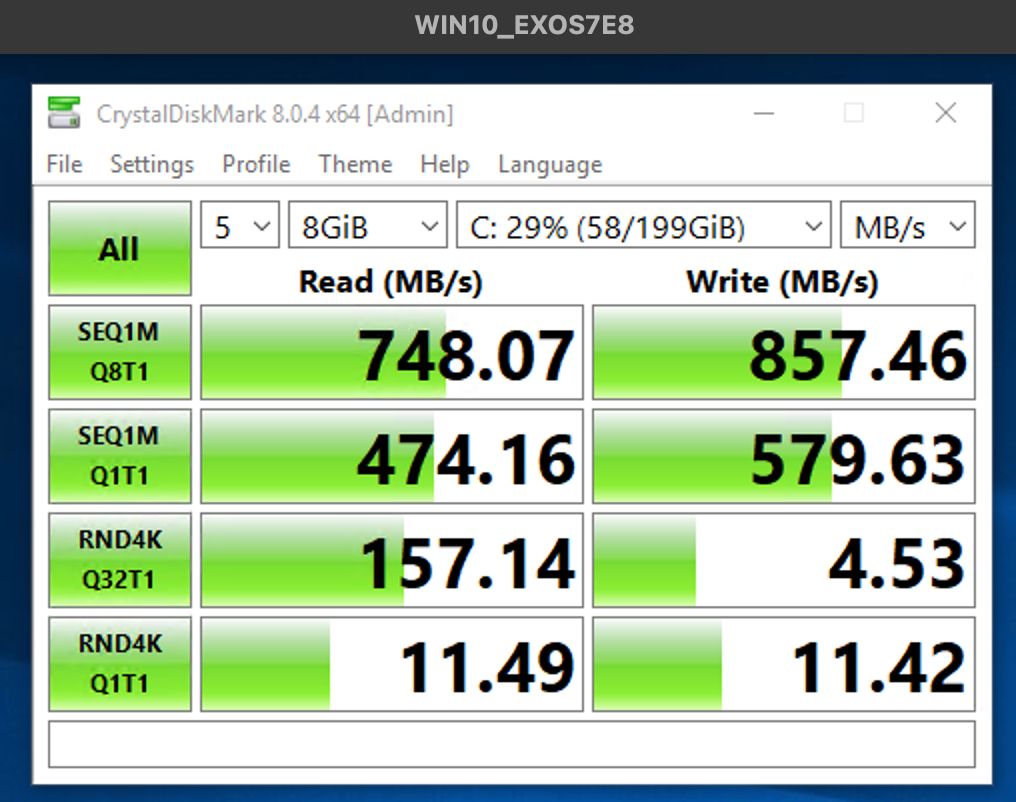

These are the same tests on the equivalent VM located on the Seagate Exos 7N8 RAID array.

I used two tools to see whether they would show the same or similar results, and in the end they did. The VMs on the Synology drives generally had better write results, while the Seagate drives were better at reading data. This was true for both small and large files.

Now let's see the ESXi hypervisor side of things.

ESXi

For comparison with the Synology VMM hypervisor, I chose VMware ESXi 7. VMware is still the leading name in virtualization, so I wanted to see how virtual machines behave on that platform.

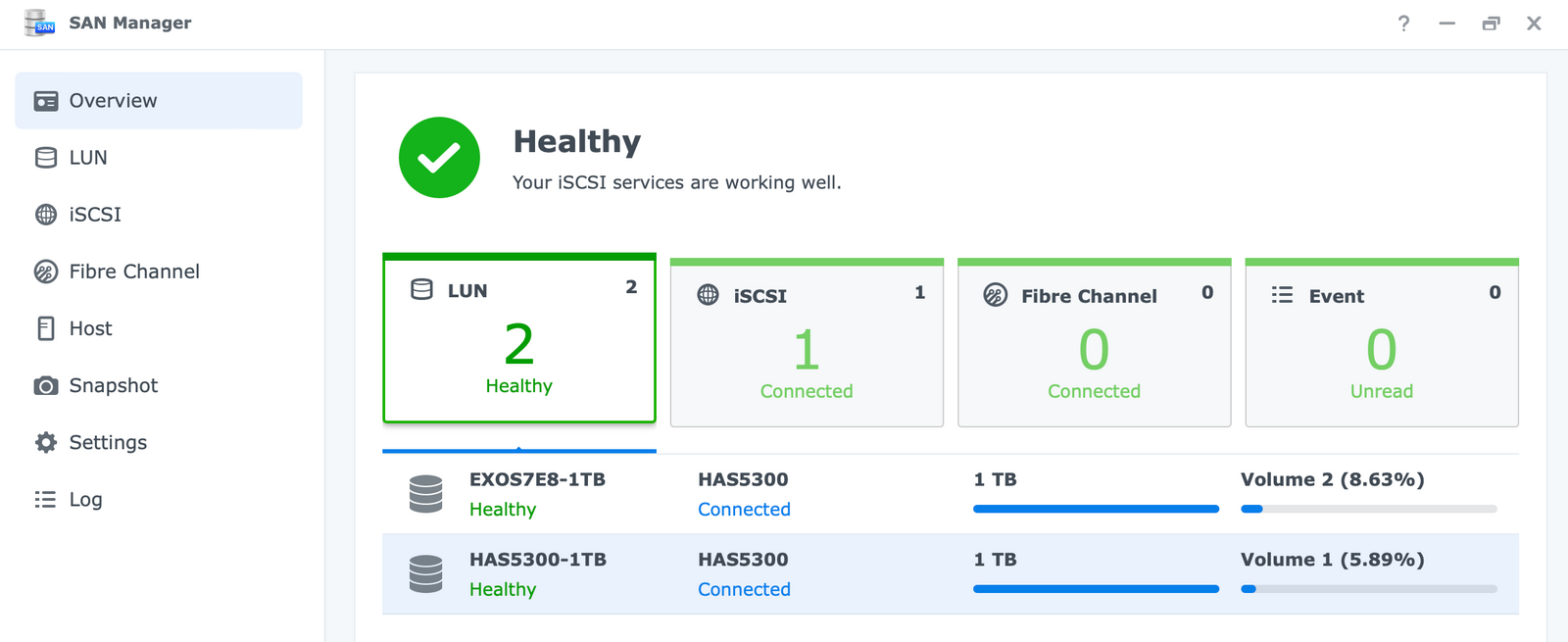

iSCSI datastore configuration (SAN Manager)

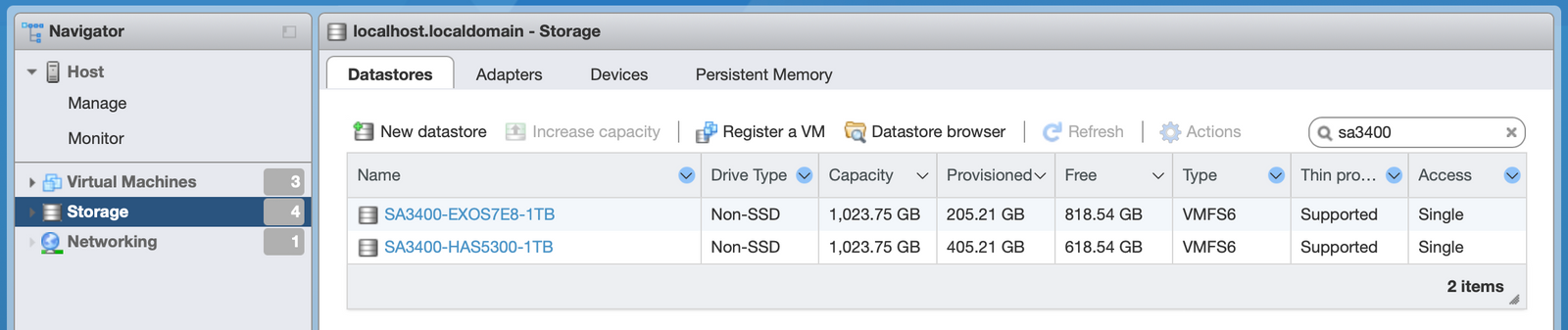

Unlike VMM, ESXi is a bare-metal hypervisor, and in this setup it has no local storage of its own for the test virtual machines. I wanted to see how a Windows 10 virtual machine would behave if its disks still resided on the SA3400 drives, accessed via iSCSI over a 10G network.

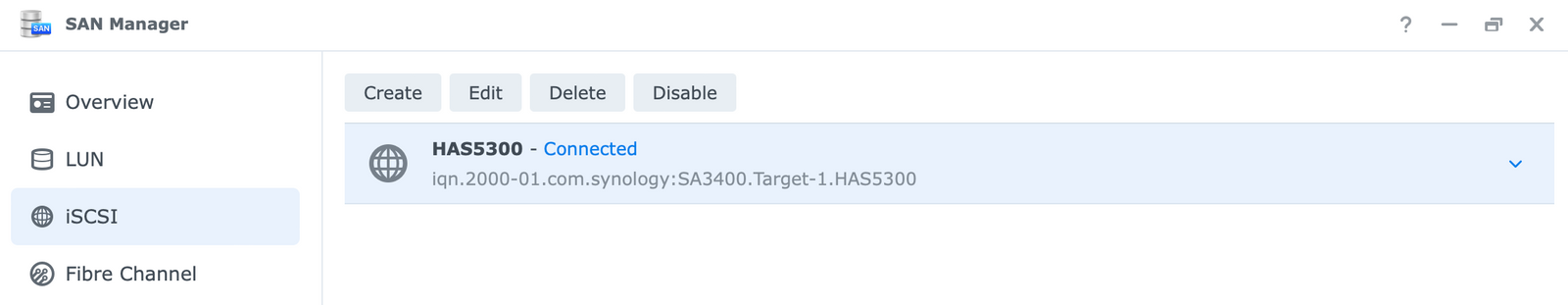

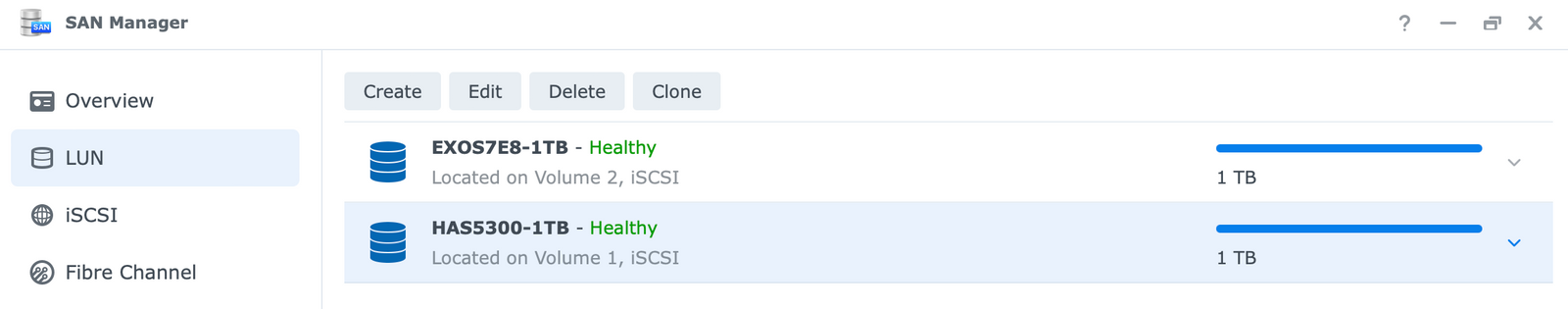

For this scenario, you first need to configure the iSCSI target and the LUNs, which ESXi then connects to and presents as datastores.

Although iSCSI support has been part of DSM for a very long time, with DSM 7 Synology moved iSCSI and Fibre Channel support into a separate application, SAN Manager.

This shows Synology's ambition to move into the enterprise segment, although it is still quite far from the real SAN solutions of big players such as NetApp, IBM, and others.

In any case, after creating the iSCSI target and the LUNs, one on each RAID array, you need to connect them within the ESXi hypervisor.

On the ESXi side, after configuring the iSCSI adapter, the wizard simply connects the LUNs, which are then reported as available datastores in the hypervisor.
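For readers who prefer the ESXi shell to the wizard, the same steps can be sketched with `esxcli`. The Python below only builds and prints the commands rather than running them. The adapter name `vmhba65` and the address `192.0.2.10` are hypothetical placeholders (the real adapter name is listed by `esxcli iscsi adapter list` once software iSCSI is enabled), and 3260 is the standard iSCSI port. This is a sketch of a typical software iSCSI setup, not the exact procedure used in these tests.

```python
import shlex


def esxcli_iscsi_commands(adapter: str, target_ip: str, port: int = 3260) -> list[list[str]]:
    """Build esxcli invocations roughly mirroring the GUI steps (sketch only)."""
    return [
        # 1. Enable the software iSCSI adapter on the ESXi host.
        ["esxcli", "iscsi", "software", "set", "--enabled=true"],
        # 2. Add the NAS as a dynamic discovery (SendTargets) address.
        ["esxcli", "iscsi", "adapter", "discovery", "sendtarget", "add",
         f"--adapter={adapter}", f"--address={target_ip}:{port}"],
        # 3. Rescan the adapter so the LUNs show up as storage devices.
        ["esxcli", "storage", "core", "adapter", "rescan", f"--adapter={adapter}"],
    ]


# Hypothetical adapter name and NAS address; print instead of executing.
for cmd in esxcli_iscsi_commands("vmhba65", "192.0.2.10"):
    print(shlex.join(cmd))
```

After the rescan, the LUNs appear as storage devices, and a VMFS datastore is then created on each of them, for example with the New Datastore wizard.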

Installation tests and boot up

Unlike in VMM, Windows 10 installed on the ESXi virtual machines in just 6-7 minutes per VM (again at almost the same speed), regardless of which disk array they were on.

Boot times, on the other hand, were the same as in VMM, about 15-20 seconds.

The difference between the hypervisors is obvious, although I'm not sure why the installation on VMM takes so long.

Crystal and ATTO disk benchmarks (ESXi)

These are the comparative results from identical Windows 10 VMs located on the two RAID arrays.

They show that the disk tests are faster on the ESXi platform, and generally slightly better on the VM located on the Exos array.

Windows 10 network test on separate hypervisors

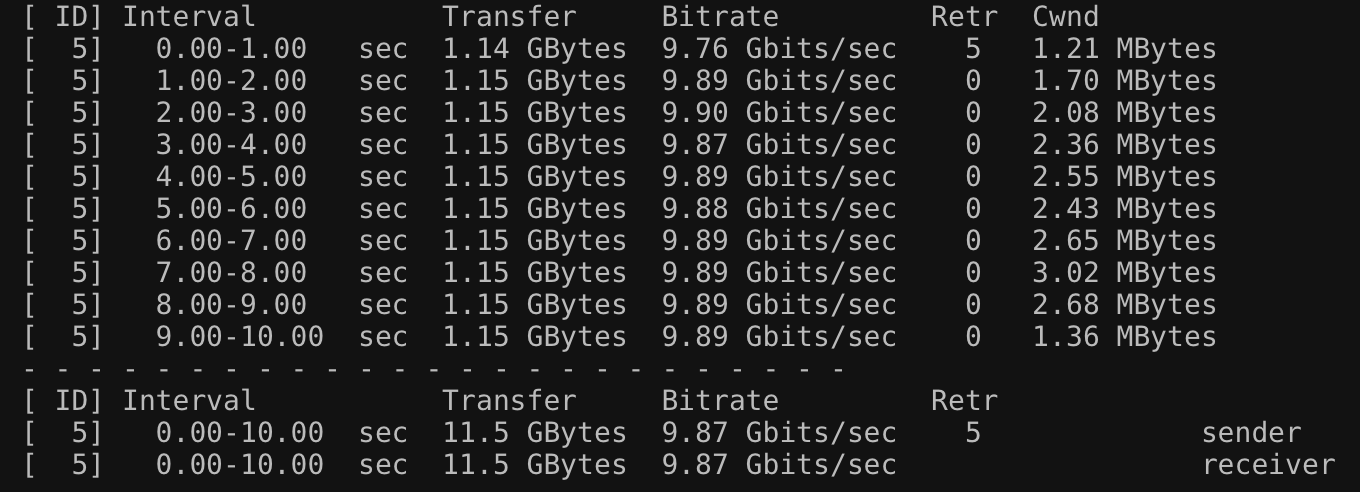

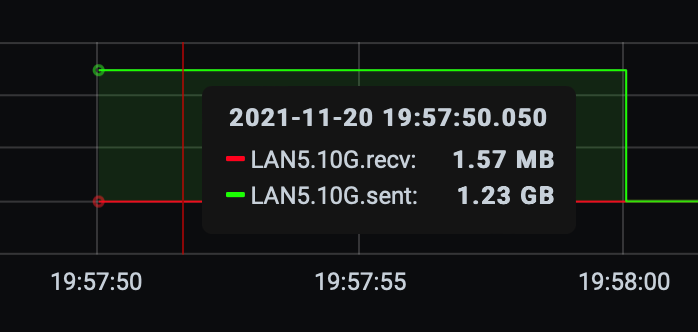

Using iPerf, I also ran a series of network throughput tests to get a better picture of how the SA3400 behaves towards other devices on the network, as well as how the virtual machines on the VMM and ESXi hypervisors perform.

First, a simple 10G speed test between the SA3400 and the RS3614RPxs+ NAS, connected via a 10G switch. Unsurprisingly, the link reached full speed. This baseline confirms that there is no physical bottleneck between the devices that took part in the tests.
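The article doesn't say which iPerf version or options were used, so the following is only a sketch assuming iperf3, whose `-J` option prints a JSON report. It runs a client against a host where `iperf3 -s` is already listening and extracts the receiver-side TCP throughput. The function names and the server address in the usage note are hypothetical.

```python
import json
import subprocess


def parse_iperf3_gbps(report: str) -> float:
    """Extract receiver-side TCP throughput (Gbit/s) from an iperf3 JSON report."""
    data = json.loads(report)
    # For TCP tests, 'sum_received' aggregates all parallel streams.
    bps = data["end"]["sum_received"]["bits_per_second"]
    return bps / 1e9


def run_iperf3(server: str, seconds: int = 10, streams: int = 1) -> float:
    """Run an iperf3 client against `server`, which must already run `iperf3 -s`."""
    result = subprocess.run(
        ["iperf3", "-c", server, "-t", str(seconds), "-P", str(streams), "-J"],
        capture_output=True,
        text=True,
        check=True,  # raise if iperf3 exits with an error
    )
    return parse_iperf3_gbps(result.stdout)
```

For example, `run_iperf3("192.0.2.20", streams=4)` would measure four parallel TCP streams (iperf3's `-P` option), which is one way to check whether more simultaneous connections reach a higher aggregate speed.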

Below are the results from the virtual machines on the SA3400 VMM platform (one VM on each array) to the physical RS3614RPxs+ NAS, followed by the same tests from the ESXi hypervisor.

Win10 (VMM) > RS3614RPxs+ EXOS7N8 10G: 4.97 Gbit/s

Win10 (VMM) > RS3614RPxs+ HAS5300 10G: 4.83 Gbit/s

Win10 (ESXi) > RS3614RPxs+ EXOS7N8 10G: 3.25 Gbit/s

Win10 (ESXi) > RS3614RPxs+ HAS5300 10G: 3.23 Gbit/s

As expected, the two sets of results differ, simply because in the first two tests the virtual machines run on a device that hosts both the hypervisor and the virtual disks, while in the third and fourth tests the virtual disks are on the SA3400 NAS and the hypervisor is a separate device.
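To put the four VM to RS3614RPxs+ figures in context, this short Python snippet expresses each one as a share of the nominal 10G line rate (ignoring protocol overhead) and in MB/s (decimal, 1 MB = 10^6 bytes), and computes how much faster the VMM result is than the ESXi one on each array. It uses only the numbers listed above.

```python
LINE_RATE_GBPS = 10.0  # nominal 10G link

# Win10 VM -> RS3614RPxs+ iPerf results from the list above, in Gbit/s.
results = {
    ("VMM", "EXOS7N8"): 4.97,
    ("VMM", "HAS5300"): 4.83,
    ("ESXi", "EXOS7N8"): 3.25,
    ("ESXi", "HAS5300"): 3.23,
}


def share_of_line_rate(gbps: float) -> float:
    """Fraction of the nominal link speed (protocol overhead ignored)."""
    return gbps / LINE_RATE_GBPS


def to_mb_per_s(gbps: float) -> float:
    """Convert Gbit/s to decimal megabytes per second."""
    return gbps * 1000 / 8


for (hypervisor, array), gbps in results.items():
    print(f"{hypervisor:<4} {array}: {share_of_line_rate(gbps):.1%} of 10G, "
          f"{to_mb_per_s(gbps):.0f} MB/s")

for array in ("EXOS7N8", "HAS5300"):
    ratio = results[("VMM", array)] / results[("ESXi", array)]
    print(f"{array}: VMM is {ratio:.2f}x ESXi")
```

On both arrays, the VMM virtual machines reach roughly half of the line rate and about 1.5 times the ESXi throughput.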

The next series consisted of tests between virtual machines on separate arrays (HAS and EXOS) but within the same hypervisor.

Win10 (EXOS) > Win10 (HAS) VMM 10G: 4.27 Gbit/s

Win10 (HAS) > Win10 (EXOS) VMM 10G: 3.79 Gbit/s

Win10 (EXOS) > Win10 (HAS) ESXi 10G: 2.06 Gbit/s

Win10 (HAS) > Win10 (EXOS) ESXi 10G: 2.20 Gbit/s

The first two tests were performed on the Synology VMM hypervisor and the other two on ESXi. Again, as in the previous series, VMM gave better results than ESXi, which I attribute to the simpler configuration and the shorter communication path.

As a final series, I ran tests between virtual machines on the two separate hypervisors.

Win10 (VMM) > Win10 (ESXi) EXOS7N8 10G: 2.44 Gbit/s

Win10 (VMM) > Win10 (ESXi) HAS5300 10G: 2.34 Gbit/s

These results are similar to the previous ESXi series, which suggests that this is where the bottleneck lies. Whether it is caused by additional configuration required on the network cards remains to be seen. Even after adjusting the jumbo frame settings, I could not get better results.
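For context on why jumbo frames alone are unlikely to lift results in the 2-3 Gbit/s range: on a 10G link they mainly reduce per-frame header overhead, which is already small at the standard 1500-byte MTU. The Python sketch below computes the theoretical maximum TCP payload rate for MTU 1500 and 9000, assuming IPv4 and TCP headers without options plus the standard Ethernet preamble, header, FCS and inter-frame gap. It is a textbook upper bound, not a measurement from these tests.

```python
# Per-frame Ethernet cost on the wire, in bytes:
# preamble + SFD (8), MAC header (14), FCS (4), inter-frame gap (12).
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # 38 bytes

# IPv4 (20) + TCP (20) headers, assuming no TCP options.
IP_TCP_HEADERS = 20 + 20


def tcp_goodput_gbps(mtu: int, line_rate_gbps: float = 10.0) -> float:
    """Maximum TCP payload rate per direction for a given MTU."""
    payload = mtu - IP_TCP_HEADERS      # user data carried per frame
    on_wire = mtu + ETH_WIRE_OVERHEAD   # bytes the frame occupies on the link
    return line_rate_gbps * payload / on_wire


for mtu in (1500, 9000):
    print(f"MTU {mtu}: ~{tcp_goodput_gbps(mtu):.2f} Gbit/s")
# MTU 1500: ~9.49 Gbit/s
# MTU 9000: ~9.91 Gbit/s
```

The theoretical gain is only about 4 percent, so with results well below 5 Gbit/s the limit is probably elsewhere, which is consistent with jumbo frames not helping in these tests.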

Either way, these are solid speeds for one-to-one communication. Higher speeds can likely be expected with more simultaneous connections, but in any case, the SA3400 performs more than satisfactorily.

Conclusion

When it comes to virtualization, the SA3400 works well, and fast. Working inside the virtual machines feels solid, with no problems once VirtIO support is installed, especially when the VMs are accessed via an RDP client (for Windows VMs, for example). Avoid the web console for any serious work, because it is really only meant for the initial configuration of a VM.

In these scenarios, the CPU and RAM will certainly not be a bottleneck, and SSDs would likely give even better results. SAS drives are more than enough for good, stable performance, but if you run a larger number of virtual machines, be sure to try SSDs in your arrays.

In the next and final article, I will turn to multimedia and test the Synology Photos application as well as Plex transcoding, to see where the limits of this CPU lie in such workloads.

Additionally, we will look at SMB and NFS transfers between the SA3400 and RS3614RPxs+ devices.